Professional-Cloud-Architect Free Update With 100% Exam Passing Guarantee [2021]

[Oct-2021] Verified Google Exam Dumps with Professional-Cloud-Architect Exam Study Guide

NEW QUESTION 121

You want to enable your running Google Container Engine cluster to scale as demand for your application changes.

What should you do?

- A. Create a new Container Engine cluster with the following command:

gcloud alpha container clusters create mycluster --enable-autoscaling --min-nodes=1 --max-nodes=10

and redeploy your application.

- B. Add a tag to the instances in the cluster with the following command:

gcloud compute instances add-tags INSTANCE --tags enable --autoscaling max-nodes-10

- C. Update the existing Container Engine cluster with the following command:

gcloud alpha container clusters update mycluster --enable-autoscaling --min-nodes=1 --max-nodes=10

- D. Add additional nodes to your Container Engine cluster using the following command:

gcloud container clusters resize CLUSTER_NAME --size 10

Answer: C

Explanation:

Cluster autoscaling: https://cloud.google.com/kubernetes-engine/docs/concepts/cluster-autoscaler

--enable-autoscaling

Enables autoscaling in the node pool specified by --node-pool, or in the default node pool if --node-pool is not provided.

Where:

--max-nodes=MAX_NODES

Maximum number of nodes to which the node pool specified by --node-pool (or the default node pool if unspecified) can scale.

Incorrect Answers:

A: Creating a new cluster and redeploying is unnecessary, because autoscaling can be enabled on the running cluster.

B: Instance tags do not configure autoscaling.

D: Resizing sets a fixed node count; the cluster still does not scale as demand changes.

Warning: Do not use alpha clusters or alpha features for production workloads.

Note: You can experiment with Kubernetes alpha features by creating an alpha cluster. Alpha clusters are short-lived clusters that run stable Kubernetes releases with all Kubernetes APIs and features enabled. Alpha clusters are designed for advanced users and early adopters to experiment with workloads that take advantage of new features before those features are production-ready. You can use alpha clusters just like normal Kubernetes Engine clusters.

References:

https://cloud.google.com/sdk/gcloud/reference/container/clusters/create
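As a rough sketch of the in-place update discussed in this question, the Python helper below assembles a `gcloud container clusters update` argument list from the flags quoted in the explanation. The function name `autoscaling_update_args` is hypothetical, and the helper only builds the command; it does not run it. A real invocation would typically also need a zone or region unless defaults are configured.

```python
from typing import List, Optional


def autoscaling_update_args(cluster: str, min_nodes: int, max_nodes: int,
                            node_pool: Optional[str] = None) -> List[str]:
    """Build (but do not run) a gcloud command that enables node autoscaling."""
    if min_nodes < 0 or max_nodes < min_nodes:
        raise ValueError("require 0 <= min_nodes <= max_nodes")
    args = [
        "gcloud", "container", "clusters", "update", cluster,
        "--enable-autoscaling",
        f"--min-nodes={min_nodes}",
        f"--max-nodes={max_nodes}",
    ]
    # When --node-pool is omitted, the default node pool is targeted.
    if node_pool is not None:
        args.append(f"--node-pool={node_pool}")
    return args


if __name__ == "__main__":
    # Print the command for review instead of executing it.
    print(" ".join(autoscaling_update_args("mycluster", 1, 10)))
```

On a machine where gcloud is installed and authenticated, the returned list could be passed to `subprocess.run(args, check=True)`.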

NEW QUESTION 122

Your company wants you to build a highly reliable web application with a few public APIs as the backend. You don't expect a lot of user traffic, but traffic could spike occasionally. You want to leverage Cloud Load Balancing, and the solution must be cost-effective for users. What should you do?

- A. Store static content such as HTML and images in a Cloud Storage bucket. Host the APIs on a zonal Google Kubernetes Engine cluster with worker nodes in multiple zones, and save the user data in Cloud Spanner.

- B. Store static content such as HTML and images in a Cloud Storage bucket. Use Cloud Functions to host the APIs and save the user data in Firestore.

- C. Store static content such as HTML and images in Cloud CDN. Use Cloud Run to host the APIs and save the user data in Cloud SQL.

- D. Store static content such as HTML and images in Cloud CDN. Host the APIs on App Engine and store the user data in Cloud SQL.

Answer: B

NEW QUESTION 123

Case Study: 3 - JencoMart Case Study

Company Overview

JencoMart is a global retailer with over 10,000 stores in 16 countries. The stores carry a range of goods, such as groceries, tires, and jewelry. One of the company's core values is excellent customer service. In addition, they recently introduced an environmental policy to reduce their carbon output by 50% over the next 5 years.

Company Background

JencoMart started as a general store in 1931, and has grown into one of the world's leading brands known for great value and customer service. Over time, the company transitioned from only physical stores to a stores and online hybrid model, with 25% of sales online. Currently, JencoMart has little presence in Asia, but considers that market key for future growth.

Solution Concept

JencoMart wants to migrate several critical applications to the cloud but has not completed a technical review to determine their suitability for the cloud and the engineering required for migration. They currently host all of these applications on infrastructure that is at its end of life and is no longer supported.

Existing Technical Environment

JencoMart hosts all of its applications in 4 data centers: 3 in North America and 1 in Europe. Most applications are dual-homed.

JencoMart understands the dependencies and resource usage metrics of their on-premises architecture.

Application Customer loyalty portal

LAMP (Linux, Apache, MySQL and PHP) application served from the two JencoMart-owned U.S. data centers.

Database

* Oracle Database stores user profiles

* PostgreSQL database stores user credentials

-homed in US West

Authenticates all users

Compute

* 30 machines in US West Coast, each machine has:

core CPUs

* 20 machines in US East Coast, each machine has:

-core CPU

Storage

* Access to shared 100 TB SAN in each location

* Tape backup every week

Business Requirements

* Optimize for capacity during peak periods and value during off-peak periods

* Guarantee service availability and support

* Reduce on-premises footprint and associated financial and environmental impact.

* Move to outsourcing model to avoid large upfront costs associated with infrastructure purchase

* Expand services into Asia.

Technical Requirements

* Assess key application for cloud suitability.

* Modify application for the cloud.

* Move applications to a new infrastructure.

* Leverage managed services wherever feasible

* Sunset 20% of capacity in existing data centers

* Decrease latency in Asia

CEO Statement

JencoMart will continue to develop personal relationships with our customers as more people access the web. The future of our retail business is in the global market and the connection between online and in-store experiences. As a large global company, we also have a responsibility to the environment through 'green' initiatives and policies.

CTO Statement

The challenges of operating data centers prevent focus on key technologies critical to our long-term success. Migrating our data services to a public cloud infrastructure will allow us to focus on big data and machine learning to improve our service to customers.

CFO Statement

Since its founding, JencoMart has invested heavily in our data services infrastructure. However, because of changing market trends, we need to outsource our infrastructure to ensure our long-term success. This model will allow us to respond to increasing customer demand during peak and reduce costs.

For this question, refer to the JencoMart case study

A few days after JencoMart migrates the user credentials database to Google Cloud Platform and shuts down the old server, the new database server stops responding to SSH connections. It is still serving database requests to the application servers correctly. What three steps should you take to diagnose the problem? Choose 3 answers

- A. Connect the machine to another network with very simple firewall rules and investigate.

- B. Take a snapshot of the disk and connect to a new machine to investigate.

- C. Delete the instance, attach the disk to a new VM, and investigate.

- D. Print the Serial Console output for the instance for troubleshooting, activate the interactive console, and investigate.

- E. Delete the virtual machine (VM) and disks and create a new one.

- F. Check inbound firewall rules for the network the machine is connected to.

Answer: B,D,F

Explanation:

F: Handling the "Unable to connect on port 22" error message

Possible causes include:

There is no firewall rule allowing SSH access on the port. SSH access on port 22 is enabled on all Compute Engine instances by default. If you have disabled access, SSH from the Browser will not work. If you run sshd on a port other than 22, you need to enable access to that port with a custom firewall rule.

The firewall rule allowing SSH access is enabled, but is not configured to allow connections from GCP Console services. Source IP addresses for browser-based SSH sessions are dynamically allocated by GCP Console and can vary from session to session.

D: Handling the "Could not connect, retrying..." error

You can verify that the daemon is running by navigating to the serial console output page and looking for output lines prefixed with the accounts-from-metadata: string. If you are using a standard image but you do not see these output prefixes in the serial console output, the daemon might be stopped. Reboot the instance to restart the daemon.

References:

https://cloud.google.com/compute/docs/ssh-in-browser
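The firewall check in this scenario can be illustrated with a small Python sketch: given a list of rules, decide whether any enabled inbound allow rule covers a TCP port. The rule dictionaries use a simplified, hypothetical shape (`direction`, `disabled`, `allowed`, `protocol`, `ports`), not the exact Compute Engine resource format, and the sketch ignores source ranges, target tags, priorities, and deny rules.

```python
from typing import Dict, List


def allows_inbound_tcp(rules: List[Dict], port: int) -> bool:
    """Return True if any enabled ingress allow rule covers the TCP port."""
    for rule in rules:
        if rule.get("direction") != "INGRESS" or rule.get("disabled", False):
            continue
        for allowed in rule.get("allowed", []):
            if allowed.get("protocol") not in ("tcp", "all"):
                continue
            ports = allowed.get("ports")
            if not ports:
                # No port list: every port of the protocol is allowed.
                return True
            for spec in ports:
                # A spec is either a single port ("22") or a range ("20-25").
                low, _, high = spec.partition("-")
                if int(low) <= port <= int(high or low):
                    return True
    return False


if __name__ == "__main__":
    # Hypothetical rule set: the database port is open, SSH is not.
    rules = [{"direction": "INGRESS",
              "allowed": [{"protocol": "tcp", "ports": ["5432"]}]}]
    print("db reachable:", allows_inbound_tcp(rules, 5432))
    print("ssh reachable:", allows_inbound_tcp(rules, 22))
```

A rule set like the one in the usage example would match the symptom in the question: database requests succeed while SSH connections fail.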


NEW QUESTION 124

You need to evaluate your team's readiness for a new GCP project. You must perform the evaluation and create a skills gap plan that incorporates the business goal of cost optimization. Your team has deployed two GCP projects successfully to date. What should you do?

- A. Allocate budget for team training. Set a deadline for the new GCP project.

- B. Allocate budget to hire skilled external consultants. Set a deadline for the new GCP project.

- C. Allocate budget to hire skilled external consultants. Create a roadmap for your team to achieve Google Cloud certification based on job role.

- D. Allocate budget for team training. Create a roadmap for your team to achieve Google Cloud certification based on job role.

Answer: A

NEW QUESTION 125

Case Study: 5 - Dress4win

Company Overview

Dress4win is a web-based company that helps their users organize and manage their personal wardrobe using a website and mobile application. The company also cultivates an active social network that connects their users with designers and retailers. They monetize their services through advertising, e-commerce, referrals, and a freemium app model. The application has grown from a few servers in the founder's garage to several hundred servers and appliances in a colocated data center. However, the capacity of their infrastructure is now insufficient for the application's rapid growth. Because of this growth and the company's desire to innovate faster, Dress4Win is committing to a full migration to a public cloud.

Solution Concept

For the first phase of their migration to the cloud, Dress4win is moving their development and test environments. They are also building a disaster recovery site, because their current infrastructure is at a single location. They are not sure which components of their architecture they can migrate as is and which components they need to change before migrating them.

Existing Technical Environment

The Dress4win application is served out of a single data center location. All servers run Ubuntu LTS v16.04.

Databases:

MySQL. 1 server for user data, inventory, static data:

- MySQL 5.8

- 8 core CPUs

- 128 GB of RAM

- 2x 5 TB HDD (RAID 1)

Redis 3 server cluster for metadata, social graph, caching. Each server is:

- Redis 3.2

- 4 core CPUs

- 32 GB of RAM

Compute:

40 Web Application servers providing micro-services based APIs and static content.

- Tomcat - Java

- Nginx

- 4 core CPUs

- 32 GB of RAM

20 Apache Hadoop/Spark servers:

- Data analysis

- Real-time trending calculations

- 8 core CPUs

- 128 GB of RAM

- 4x 5 TB HDD (RAID 1)

3 RabbitMQ servers for messaging, social notifications, and events:

- 8 core CPUs

- 32 GB of RAM

Miscellaneous servers:

- Jenkins, monitoring, bastion hosts, security scanners

- 8 core CPUs

- 32 GB of RAM

Storage appliances:

iSCSI for VM hosts

Fiber channel SAN - MySQL databases

- 1 PB total storage; 400 TB available

NAS - image storage, logs, backups

- 100 TB total storage; 35 TB available

Business Requirements

* Build a reliable and reproducible environment with scaled parity of production.

* Improve security by defining and adhering to a set of security and Identity and Access Management (IAM) best practices for cloud.

* Improve business agility and speed of innovation through rapid provisioning of new resources.

* Analyze and optimize architecture for performance in the cloud.

Technical Requirements

* Easily create non-production environment in the cloud.

* Implement an automation framework for provisioning resources in cloud.

* Implement a continuous deployment process for deploying applications to the on-premises datacenter or cloud.

* Support failover of the production environment to cloud during an emergency.

* Encrypt data on the wire and at rest.

* Support multiple private connections between the production data center and cloud environment.

Executive Statement

Our investors are concerned about our ability to scale and contain costs with our current infrastructure. They are also concerned that a competitor could use a public cloud platform to offset their up-front investment and free them to focus on developing better features. Our traffic patterns are highest in the mornings and weekend evenings; during other times, 80% of our capacity is sitting idle.

Our capital expenditure is now exceeding our quarterly projections. Migrating to the cloud will likely cause an initial increase in spending, but we expect to fully transition before our next hardware refresh cycle. Our total cost of ownership (TCO) analysis over the next 5 years for a public cloud strategy achieves a cost reduction between 30% and 50% over our current model.

For this question, refer to the Dress4Win case study. Which of the compute services should be migrated as-is and would still be an optimized architecture for performance in the cloud?

- A. Web applications deployed using App Engine standard environment

- B. Jenkins, monitoring, bastion hosts, security scanners services deployed on custom machine types

- C. Hadoop/Spark deployed using Cloud Dataproc Regional in High Availability mode

- D. RabbitMQ deployed using an unmanaged instance group

Answer: A

NEW QUESTION 126

You are tasked with building an online analytical processing (OLAP) marketing analytics and reporting tool.

This requires a relational database that can operate on hundreds of terabytes of data. What is the Google recommended tool for such applications?

- A. Cloud Spanner, because it is globally distributed

- B. Cloud Firestore, because it offers real-time synchronization across devices

- C. Cloud SQL, because it is a fully managed relational database

- D. BigQuery, because it is designed for large-scale processing of tabular data

Answer: D

Explanation:

Reference: https://cloud.google.com/files/BigQueryTechnicalWP.pdf

NEW QUESTION 127

For this question, refer to the Dress4Win case study.

The Dress4Win security team has disabled external SSH access into production virtual machines (VMs) on Google Cloud Platform (GCP). The operations team needs to remotely manage the VMs, build and push Docker containers, and manage Google Cloud Storage objects. What can they do?

- A. Have the development team build an API service that allows the operations team to execute specific remote procedure calls to accomplish their tasks.

- B. Develop a new access request process that grants temporary SSH access to cloud VMs when an operations engineer needs to perform a task.

- C. Configure a VPN connection to GCP to allow SSH access to the cloud VMs.

- D. Grant the operations engineers access to use Google Cloud Shell.

Answer: D

NEW QUESTION 128

A development team at your company has created a dockerized HTTPS web application. You need to deploy the application on Google Kubernetes Engine (GKE) and make sure that the application scales automatically.

How should you deploy to GKE?

- A. Use the Horizontal Pod Autoscaler and enable cluster autoscaling on the Kubernetes cluster. Use a Service resource of type LoadBalancer to load-balance the HTTPS traffic.

- B. Enable autoscaling on the Compute Engine instance group. Use a Service resource of type LoadBalancer to load-balance the HTTPS traffic.

- C. Enable autoscaling on the Compute Engine instance group. Use an Ingress resource to load-balance the HTTPS traffic.

- D. Use the Horizontal Pod Autoscaler and enable cluster autoscaling. Use an Ingress resource to load-balance the HTTPS traffic.

Answer: A

Explanation:

Explanation/Reference: https://cloud.google.com/kubernetes-engine/docs/how-to/cluster-autoscaler
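To make the pod-level half of this answer concrete, here is a minimal Python sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: the desired count is the current count scaled by the ratio of the observed metric to the target, rounded up. The sketch leaves out the tolerance band and stabilization behavior, and the function and parameter names are hypothetical. Cluster autoscaling then adds nodes when the resulting pods cannot be scheduled.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int) -> int:
    """ceil(current_replicas * current_metric / target_metric), clamped."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured replica bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60, min_replicas=1, max_replicas=10))
```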

NEW QUESTION 129

You have an application deployed on Google Kubernetes Engine using a Deployment named echo-deployment.

The deployment is exposed using a Service called echo-service. You need to perform an update to the application with minimal downtime to the application. What should you do?

- A. Update the service yaml file with the new container image. Use kubectl delete service/echo-service and kubectl create -f <yaml-file>

- B. Use kubectl set image deployment/echo-deployment <new-image>

- C. Use the rolling update functionality of the Instance Group behind the Kubernetes cluster

- D. Update the deployment yaml file with the new container image. Use kubectl delete deployment/echo-deployment and kubectl create -f <yaml-file>

Answer: B
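Changing the image on a Deployment triggers a rolling update, which is why it avoids the downtime of deleting and recreating resources. The toy Python simulation below illustrates the idea under simplifying assumptions: one extra pod is started at a time, no old pod is removed before its replacement exists, and new pods count as ready immediately. These settings and the function name `rolling_update` are hypothetical and are not the Deployment defaults.

```python
from typing import List, Tuple


def rolling_update(replicas: int, old: str, new: str) -> Tuple[List[str], int]:
    """Replace pods one at a time; return the final pods and the lowest
    pod count observed after each replacement step."""
    pods = [old] * replicas
    min_ready = len(pods)
    for _ in range(replicas):
        pods.append(new)   # start one extra pod on the new image
        pods.remove(old)   # then retire one pod on the old image
        min_ready = min(min_ready, len(pods))
    return pods, min_ready


if __name__ == "__main__":
    final_pods, lowest = rolling_update(3, "echo:v1", "echo:v2")
    print(final_pods, lowest)
```

In this model the serving capacity never drops below the original replica count, whereas a delete-then-create sequence passes through zero pods.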

NEW QUESTION 130

You analyzed TerramEarth's business requirement to reduce downtime, and found that they can achieve a majority of time saving by reducing customers' wait time for parts. You decided to focus on reduction of the 3 weeks aggregate reporting time.

Which modifications to the company's processes should you recommend?

- A. Increase fleet cellular connectivity to 80%, migrate from FTP to streaming transport, and develop machine learning analysis of metrics

- B. Migrate from FTP to SFTP transport, develop machine learning analysis of metrics, and increase dealer local inventory by a fixed factor

- C. Migrate from FTP to streaming transport, migrate from CSV to binary format, and develop machine learning analysis of metrics

- D. Migrate from CSV to binary format, migrate from FTP to SFTP transport, and develop machine learning analysis of metrics

Answer: C

Explanation:

The Avro binary format is the preferred format for loading compressed data. Avro data is faster to load because the data can be read in parallel, even when the data blocks are compressed.

Cloud Storage supports streaming transfers with the gsutil tool or boto library, based on HTTP chunked transfer encoding. Streaming data lets you stream data to and from your Cloud Storage account as soon as it becomes available without requiring that the data be first saved to a separate file. Streaming transfers are useful if you have a process that generates data and you do not want to buffer it locally before uploading it, or if you want to send the result from a computational pipeline directly into Cloud Storage.

Reference: https://cloud.google.com/storage/docs/streaming

https://cloud.google.com/bigquery/docs/loading-data

NEW QUESTION 131

Your company plans to migrate a multi-petabyte data set to the cloud. The data set must be available 24hrs a day. Your business analysts have experience only with using a SQL interface.

How should you store the data to optimize it for ease of analysis?

- A. Load data into Google BigQuery.

- B. Put flat files into Google Cloud Storage.

- C. Insert data into Google Cloud SQL.

- D. Stream data into Google Cloud Datastore.

Answer: A

Explanation:

Google BigQuery supports multi-petabyte storage, is highly available (which covers the 24-hours-a-day requirement), and provides a SQL interface.

https://medium.com/google-cloud/the-12-components-of-google-bigquery-c2b49829a7c7

https://cloud.google.com/solutions/bigquery-data-warehouse

https://cloud.google.com/bigquery/

NEW QUESTION 132

For this question, refer to the Dress4Win case study.

Dress4Win has configured a new uptime check with Google Stackdriver for several of their legacy services.

The Stackdriver dashboard is not reporting the services as healthy. What should they do?

- A. In the Cloud Platform Console download the list of the uptime servers' IP addresses and create an inbound firewall rule

- B. Configure their legacy web servers to allow requests that contain the User-Agent HTTP header when the value matches GoogleStackdriverMonitoring-UptimeChecks (https://cloud.google.com/monitoring)

- C. Install the Stackdriver agent on all of the legacy web servers.

- D. Configure their load balancer to pass through the User-Agent HTTP header when the value matches GoogleStackdriverMonitoring-UptimeChecks (https://cloud.google.com/monitoring)

Answer: A

NEW QUESTION 133


For this question, refer to the JencoMart case study.

JencoMart wants to move their User Profiles database to Google Cloud Platform. Which Google Database should they use?

- A. Google Cloud SQL

- B. Google Cloud Datastore

- C. Google BigQuery

- D. Cloud Spanner

Answer: B

Explanation:

Common workloads for Google Cloud Datastore:

* User profiles

* Product catalogs

* Game state

References: https://cloud.google.com/storage-options/

https://cloud.google.com/datastore/docs/concepts/overview

NEW QUESTION 134

Your company is migrating its on-premises data center into the cloud. As part of the migration, you want to integrate Kubernetes Engine for workload orchestration. Parts of your architecture must also be PCI DSS-compliant. Which of the following is most accurate?

- A. Kubernetes Engine and GCP provide the tools you need to build a PCI DSS-compliant environment.

- B. All Google Cloud services are usable because Google Cloud Platform is certified PCI-compliant.

- C. App Engine is the only compute platform on GCP that is certified for PCI DSS hosting.

- D. Kubernetes Engine cannot be used under PCI DSS because it is considered shared hosting.

Answer: A

NEW QUESTION 135

TerramEarth's CTO wants to use the raw data from connected vehicles to help identify approximately when a vehicle in the field will have a catastrophic failure. You want to allow analysts to centrally query the vehicle data.

Which architecture should you recommend?

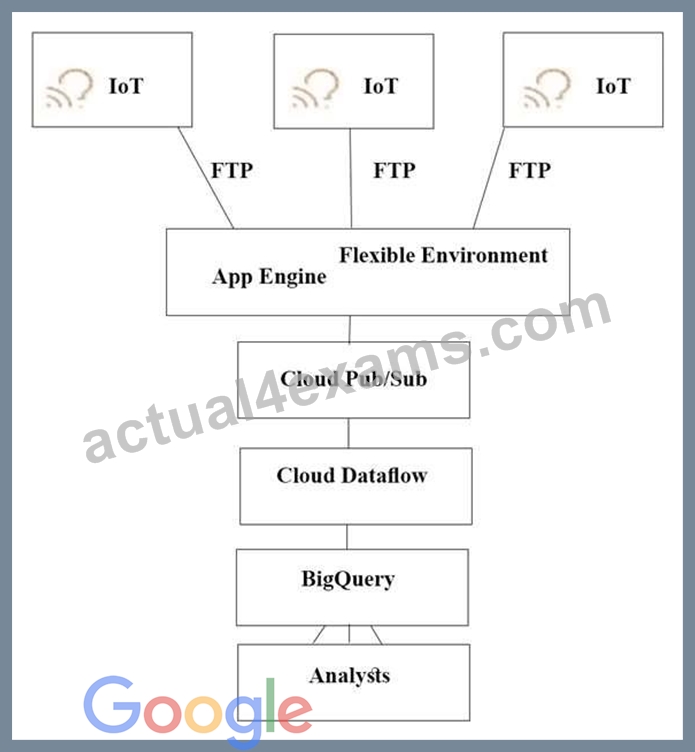

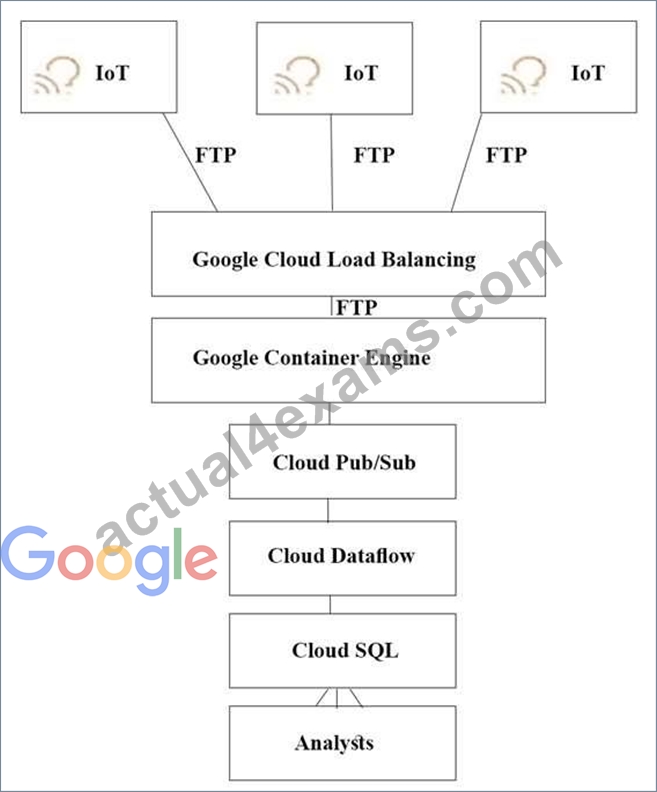

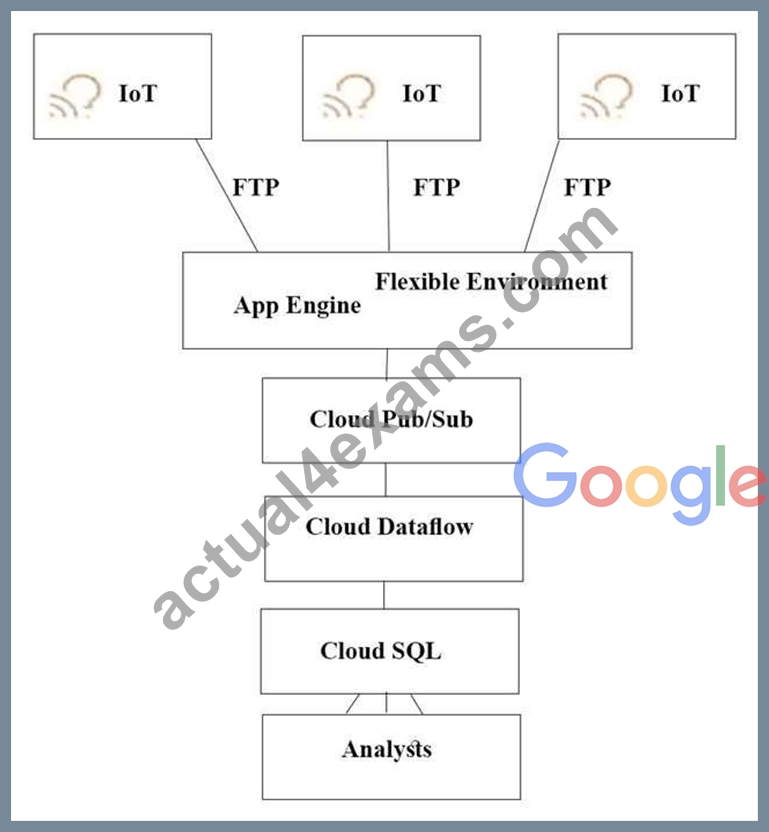

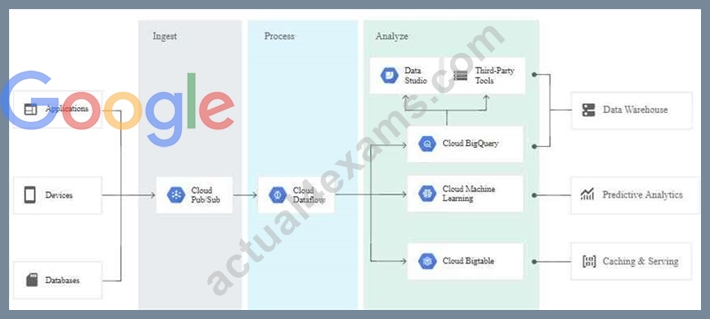

- A.

- B.

- C.

- D.

Answer: A

Explanation:

The push endpoint can be a load balancer.

A container cluster can be used.

Cloud Pub/Sub for Stream Analytics

Reference: https://cloud.google.com/pubsub/

https://cloud.google.com/solutions/iot/

https://cloud.google.com/solutions/designing-connected-vehicle-platform

https://cloud.google.com/solutions/designing-connected-vehicle-platform#data_ingestion

http://www.eweek.com/big-data-and-analytics/google-touts-value-of-cloud-iot-core-for-analyzing-connected-car-data

NEW QUESTION 136

Case Study: 4 - Dress4Win case study

Company Overview

Dress4win is a web-based company that helps their users organize and manage their personal wardrobe using a website and mobile application. The company also cultivates an active social network that connects their users with designers and retailers. They monetize their services through advertising, e-commerce, referrals, and a freemium app model.

Company Background

Dress4win's application has grown from a few servers in the founder's garage to several hundred servers and appliances in a colocated data center. However, the capacity of their infrastructure is now insufficient for the application's rapid growth. Because of this growth and the company's desire to innovate faster, Dress4win is committing to a full migration to a public cloud.

Solution Concept

For the first phase of their migration to the cloud, Dress4win is considering moving their development and test environments. They are also considering building a disaster recovery site, because their current infrastructure is at a single location. They are not sure which components of their architecture they can migrate as is and which components they need to change before migrating them.

Existing Technical Environment

The Dress4win application is served out of a single data center location.

Databases:

MySQL - user data, inventory, static data

Redis - metadata, social graph, caching

Application servers:

Tomcat - Java micro-services

Nginx - static content

Apache Beam - Batch processing

Storage appliances:

iSCSI for VM hosts

Fiber channel SAN - MySQL databases

NAS - image storage, logs, backups

Apache Hadoop/Spark servers:

Data analysis

Real-time trending calculations

MQ servers:

Messaging

Social notifications

Events

Miscellaneous servers:

Jenkins, monitoring, bastion hosts, security scanners

Business Requirements

Build a reliable and reproducible environment with scaled parity of production. Improve security by defining and adhering to a set of security and Identity and Access Management (IAM) best practices for cloud.

Improve business agility and speed of innovation through rapid provisioning of new resources.

Analyze and optimize architecture for performance in the cloud. Migrate fully to the cloud if all other requirements are met.

Technical Requirements

Evaluate and choose an automation framework for provisioning resources in cloud. Support failover of the production environment to cloud during an emergency. Identify production services that can migrate to cloud to save capacity.

Use managed services whenever possible.

Encrypt data on the wire and at rest.

Support multiple VPN connections between the production data center and cloud environment.

CEO Statement

Our investors are concerned about our ability to scale and contain costs with our current infrastructure. They are also concerned that a new competitor could use a public cloud platform to offset their up-front investment and free them to focus on developing better features.

CTO Statement

We have invested heavily in the current infrastructure, but much of the equipment is approaching the end of its useful life. We are consistently waiting weeks for new gear to be racked before we can start new projects. Our traffic patterns are highest in the mornings and weekend evenings; during other times, 80% of our capacity is sitting idle.

CFO Statement

Our capital expenditure is now exceeding our quarterly projections. Migrating to the cloud will likely cause an initial increase in spending, but we expect to fully transition before our next hardware refresh cycle. Our total cost of ownership (TCO) analysis over the next 5 years puts a cloud strategy between 30% and 50% lower than our current model.

For this question, refer to the Dress4Win case study.

The Dress4Win security team has disabled external SSH access into production virtual machines (VMs) on Google Cloud Platform (GCP). The operations team needs to remotely manage the VMs, build and push Docker containers, and manage Google Cloud Storage objects. What can they do?

- A. Have the development team build an API service that allows the operations team to execute specific remote procedure calls to accomplish their tasks.

- B. Develop a new access request process that grants temporary SSH access to cloud VMs when an operations engineer needs to perform a task.

- C. Grant the operations engineers access to use Google Cloud Shell.

- D. Configure a VPN connection to GCP to allow SSH access to the cloud VMs.

Answer: C

NEW QUESTION 137

You have an outage in your Compute Engine managed instance group: all instances keep restarting after 5 seconds. You have a health check configured, but autoscaling is disabled. Your colleague, who is a Linux expert, offered to look into the issue. You need to make sure that he can access the VMs. What should you do?

- A. Disable autoscaling for the instance group. Add his SSH key to the project-wide SSH Keys

- B. Grant your colleague the IAM role of project Viewer

- C. Perform a rolling restart on the instance group

- D. Disable the health check for the instance group. Add his SSH key to the project-wide SSH keys

Answer: D

Explanation:

https://cloud.google.com/compute/docs/instance-groups/autohealing-instances-in-migs

Health checks used for autohealing should be conservative so they don't preemptively delete and recreate your instances. When an autohealer health check is too aggressive, the autohealer might mistake busy instances for failed instances and unnecessarily restart them, reducing availability.
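What "conservative" versus "aggressive" means here can be sketched with a consecutive-failure threshold: an instance is only treated as failed after a given number of probes fail in a row. The Python below is an illustrative model with hypothetical names, not the autohealer's actual implementation.

```python
from typing import Iterable


def needs_recreation(probe_results: Iterable[bool],
                     unhealthy_threshold: int) -> bool:
    """True once `unhealthy_threshold` consecutive probes have failed."""
    if unhealthy_threshold < 1:
        raise ValueError("unhealthy_threshold must be at least 1")
    consecutive_failures = 0
    for healthy in probe_results:
        # A successful probe resets the failure streak.
        consecutive_failures = 0 if healthy else consecutive_failures + 1
        if consecutive_failures >= unhealthy_threshold:
            return True
    return False


if __name__ == "__main__":
    busy_instance = [True, False, True, False, True]  # intermittent timeouts
    print(needs_recreation(busy_instance, unhealthy_threshold=1))
    print(needs_recreation(busy_instance, unhealthy_threshold=3))
```

With a threshold of 1 the intermittently busy instance in the usage example is flagged for recreation; with a threshold of 3 it is left alone.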

NEW QUESTION 138

You need to set up Microsoft SQL Server on GCP. Management requires that there's no downtime in case of a data center outage in any of the zones within a GCP region. What should you do?

- A. Set up SQL Server Always On Availability Groups using Windows Failover Clustering. Place nodes in different zones.

- B. Configure a Cloud Spanner instance with a regional instance configuration.

- C. Set up SQL Server on Compute Engine, using Always On Availability Groups using Windows Failover Clustering. Place nodes in different subnets.

- D. Configure a Cloud SQL instance with high availability enabled.

Answer: C

Explanation:

Explanation/Reference: https://cloud.google.com/solutions/sql-server-always-on-compute-engine

NEW QUESTION 139

To reduce costs, the Director of Engineering has required all developers to move their development infrastructure resources from on-premises virtual machines (VMs) to Google Cloud Platform. These resources go through multiple start/stop events during the day and require state to persist. You have been asked to design the process of running a development environment in Google Cloud while providing cost visibility to the finance department.

Which two steps should you take? Choose 2 answers.

- A. Use the --auto-delete flag on all persistent disks and terminate the VM

- B. Store all state into local SSD, snapshot the persistent disks, and terminate the VM

- C. Store all state in Google Cloud Storage, snapshot the persistent disks, and terminate the VM

- D. Use Google BigQuery billing export and labels to associate cost to groups

- E. Use the --no-auto-delete flag on all persistent disks and stop the VM

- F. Apply VM CPU utilization label and include it in the BigQuery billing export

Answer: D,E

Explanation:

D: Billing export to BigQuery enables you to export your daily usage and cost estimates automatically throughout the day to a BigQuery dataset you specify.

Labels applied to resources that generate usage metrics are forwarded to the billing system so that you can break down your billing charges based upon label criteria. For example, the Compute Engine service reports metrics on VM instances. If you deploy a project with 2,000 VMs, each of which is labeled distinctly, then only the first 1,000 label maps seen within the 1 hour window will be preserved.

E: You cannot stop an instance that has a local SSD attached. Instead, you must migrate your critical data off of the local SSD to a persistent disk or to another instance before you delete the instance completely.

You can stop an instance temporarily so you can come back to it at a later time. A stopped instance does not incur charges, but all of the resources that are attached to the instance will still be charged. Alternatively, if you are done using an instance, delete the instance and its resources to stop incurring charges.

References:

https://cloud.google.com/billing/docs/how-to/export-data-bigquery

https://cloud.google.com/compute/docs/instances/stopping-or-deleting-an-instance
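The label-based cost breakdown described in this explanation amounts to grouping exported cost rows by a label value. The Python sketch below shows that aggregation on in-memory rows; the row shape (`cost` plus a flat `labels` dictionary), the `team` label key, and the sample figures are hypothetical and simplified compared with the real billing export schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def cost_by_label(rows: Iterable[Mapping], key: str) -> Dict[str, float]:
    """Sum the cost of each row under the value of the given label key."""
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        # Rows without the label are collected under "unlabeled".
        group = row.get("labels", {}).get(key, "unlabeled")
        totals[group] += row["cost"]
    return dict(totals)


if __name__ == "__main__":
    # Made-up sample rows for illustration only.
    sample_rows = [
        {"cost": 1.5, "labels": {"team": "team-a"}},
        {"cost": 2.5, "labels": {"team": "team-a"}},
        {"cost": 0.25, "labels": {"team": "team-b"}},
        {"cost": 1.0, "labels": {}},
    ]
    print(cost_by_label(sample_rows, "team"))
```

The "unlabeled" bucket makes resources that are missing the label visible to the finance department instead of silently dropping them.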

NEW QUESTION 140

For this question, refer to the Mountkirk Games case study. You need to analyze and define the technical architecture for the database workloads for your company, Mountkirk Games. Considering the business and technical requirements, what should you do?

- A. Use Cloud SQL to replace MySQL, and use Cloud Spanner for historical data queries.

- B. Use Cloud Bigtable to replace MySQL, and use BigQuery for historical data queries.

- C. Use Cloud Bigtable for time series data, use Cloud Spanner for transactional data, and use BigQuery for historical data queries.

- D. Use Cloud SQL for time series data, and use Cloud Bigtable for historical data queries.

Answer: C

Explanation:


https://cloud.google.com/bigtable/docs/schema-design-time-series

NEW QUESTION 141

......
