Free CKS Questions for Linux Foundation CKS Exam [Mar-2024]

Validate your CKS Exam Preparation with CKS Practice Test (Online & Offline)

The CKS certification is a valuable credential for IT professionals who work with Kubernetes. It demonstrates to potential employers that the candidate has the knowledge and skills needed to secure Kubernetes clusters and workloads, and it can help professionals advance their careers and increase their earning potential. With growing demand for Kubernetes experts, it is also a way to stand out in a crowded job market.

The CKS exam tests the knowledge and skills required to secure a Kubernetes cluster. It covers topics such as Kubernetes architecture, network security, authentication and authorization, storage security, and cluster hardening, as well as best practices for securing Kubernetes environments, including how to monitor and audit clusters for security vulnerabilities.

NEW QUESTION # 18

SIMULATION

Enable audit logs in the cluster. To do so, enable the log backend, and ensure that:

1. Logs are stored at /var/log/kubernetes/kubernetes-logs.txt.

2. Log files are retained for 5 days.

3. A maximum of 10 old audit log files are retained.

Edit and extend the basic policy to log:

1. CronJob changes at the RequestResponse level.

2. The request body of Deployment changes in the namespace kube-system.

3. All other resources in core and extensions at the Request level.

4. Don't log watch requests by the "system:kube-proxy" on endpoints or

- A. Send us the Feedback on it.

Answer: A
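One possible approach to this task is sketched below. It is a sketch under assumptions, not a verified exam answer: the policy path /etc/kubernetes/audit-policy.yaml is a hypothetical choice, and because item 4 of the task is cut off in the text above, the system:kube-proxy rule covers endpoints only. The four flags are standard kube-apiserver audit flags. Rule order matters because the first matching rule decides the audit level.

```shell
#!/bin/sh
# Sketch only: writes an audit policy to a scratch directory for review.
# On a real control-plane node the file must live where the API server can
# read it (for example /etc/kubernetes/audit-policy.yaml, a hypothetical path).
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
POLICY="$OUT_DIR/audit-policy.yaml"

cat > "$POLICY" <<'EOF'
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  # Rules are evaluated in order; the first match decides the level.
  # 4. Do not log watch requests by system:kube-proxy on endpoints.
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
      - group: ""
        resources: ["endpoints"]
  # 1. CronJob changes at RequestResponse.
  - level: RequestResponse
    resources:
      - group: "batch"
        resources: ["cronjobs"]
  # 2. Request body of Deployment changes in kube-system.
  - level: Request
    namespaces: ["kube-system"]
    resources:
      - group: "apps"
        resources: ["deployments"]
  # 3. Everything else in the core and extensions groups at Request.
  - level: Request
    resources:
      - group: ""
      - group: "extensions"
EOF

# kube-apiserver flags to add to the static Pod manifest
# (/etc/kubernetes/manifests/kube-apiserver.yaml on kubeadm clusters):
cat <<'EOF'
- --audit-policy-file=/etc/kubernetes/audit-policy.yaml
- --audit-log-path=/var/log/kubernetes/kubernetes-logs.txt
- --audit-log-maxage=5
- --audit-log-maxbackup=10
EOF
echo "policy written to $POLICY"
```

The policy file and the log directory also have to be mounted into the API server Pod (hostPath volumes plus volumeMounts), otherwise the API server will not start with these flags.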

NEW QUESTION # 19

On the Cluster worker node, enforce the prepared AppArmor profile

#include <tunables/global>

profile docker-nginx flags=(attach_disconnected,mediate_deleted) {

#include <abstractions/base>

network inet tcp,

network inet udp,

network inet icmp,

deny network raw,

deny network packet,

file,

umount,

deny /bin/** wl,

deny /boot/** wl,

deny /dev/** wl,

deny /etc/** wl,

deny /home/** wl,

deny /lib/** wl,

deny /lib64/** wl,

deny /media/** wl,

deny /mnt/** wl,

deny /opt/** wl,

deny /proc/** wl,

deny /root/** wl,

deny /sbin/** wl,

deny /srv/** wl,

deny /tmp/** wl,

deny /sys/** wl,

deny /usr/** wl,

audit /** w,

/var/run/nginx.pid w,

/usr/sbin/nginx ix,

deny /bin/dash mrwklx,

deny /bin/sh mrwklx,

deny /usr/bin/top mrwklx,

capability chown,

capability dac_override,

capability setuid,

capability setgid,

capability net_bind_service,

deny @{PROC}/* w, # deny write for all files directly in /proc (not in a subdir)

# deny write to files not in /proc/<number>/** or /proc/sys/**

deny @{PROC}/{[^1-9],[^1-9][^0-9],[^1-9s][^0-9y][^0-9s],[^1-9][^0-9][^0-9][^0-9]*}/** w,

deny @{PROC}/sys/[^k]** w, # deny /proc/sys except /proc/sys/k* (effectively /proc/sys/kernel)

deny @{PROC}/sys/kernel/{?,??,[^s][^h][^m]**} w, # deny everything except shm* in /proc/sys/kernel/

deny @{PROC}/sysrq-trigger rwklx,

deny @{PROC}/mem rwklx,

deny @{PROC}/kmem rwklx,

deny @{PROC}/kcore rwklx,

deny mount,

deny /sys/[^f]*/** wklx,

deny /sys/f[^s]*/** wklx,

deny /sys/fs/[^c]*/** wklx,

deny /sys/fs/c[^g]*/** wklx,

deny /sys/fs/cg[^r]*/** wklx,

deny /sys/firmware/** rwklx,

deny /sys/kernel/security/** rwklx,

}

Edit the prepared manifest file to include the AppArmor profile.

apiVersion: v1

kind: Pod

metadata:

name: apparmor-pod

spec:

containers:

- name: apparmor-pod

image: nginx

Finally, apply the manifest file and create the Pod specified in it.

Verify: Try running the commands ping, top, and sh inside the container.

- A. Send us your Feedback on this.

Answer: A
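The steps of this task could be sketched as follows. This is a sketch under assumptions: PROFILE_FILE is a hypothetical location for the prepared profile, and the annotation shown is the beta AppArmor annotation (newer Kubernetes releases also offer a securityContext.appArmorProfile field, which is not used here).

```shell
#!/bin/sh
# Sketch only. PROFILE_FILE is a hypothetical location for the prepared profile.
PROFILE_FILE="${PROFILE_FILE:-/etc/apparmor.d/docker-nginx}"
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
MANIFEST="$OUT_DIR/apparmor-pod.yaml"

# Run on the worker node: load the profile (enforce mode is the default),
# then confirm that it is listed.
load_profile() {
  apparmor_parser -q "$PROFILE_FILE"
  aa-status | grep docker-nginx
}

# The annotation key ends with the container name; the value names the
# profile loaded on the node.
cat > "$MANIFEST" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: apparmor-pod
  annotations:
    container.apparmor.security.beta.kubernetes.io/apparmor-pod: localhost/docker-nginx
spec:
  containers:
    - name: apparmor-pod
      image: nginx
EOF

# From a machine with cluster access: create the Pod, then try a command the
# profile denies (the profile above denies /bin/sh).
create_and_verify() {
  kubectl apply -f "$MANIFEST"
  kubectl exec apparmor-pod -- sh -c 'echo this should be denied'
}
echo "manifest written to $MANIFEST"
```

Neither function is called automatically; load_profile is meant for the worker node and create_and_verify for a shell with cluster access.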

NEW QUESTION # 20

SIMULATION

Create a user named john, create a CSR (certificate signing request) for the user, and fetch the user's certificate after approving the request.

Create a Role named john-role that can list secrets and pods in the namespace john.

Finally, create a RoleBinding named john-role-binding to attach the newly created Role john-role to the user john in the namespace john.

To verify: Use the kubectl auth CLI command to verify the permissions.

Answer:

Explanation:

Use kubectl to create a CSR and approve it.

Get the list of CSRs:

kubectl get csr

Approve the CSR:

kubectl certificate approve myuser

Get the certificate

Retrieve the certificate from the CSR:

kubectl get csr/myuser -o yaml

Here are an example ClusterRole and RoleBinding that give john permission to create NEW_CRD resources:

kubectl apply -f roleBindingJohn.yaml --as=john

rolebinding.rbac.authorization.k8s.io/john_external-rosource-rb created

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: john_crd

namespace: development-john

subjects:

- kind: User

name: john

apiGroup: rbac.authorization.k8s.io

roleRef:

kind: ClusterRole

name: crd-creation

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: crd-creation

rules:

- apiGroups: ["kubernetes-client.io"]

resources: ["NEW_CRD"]

verbs: ["create", "list", "get"]

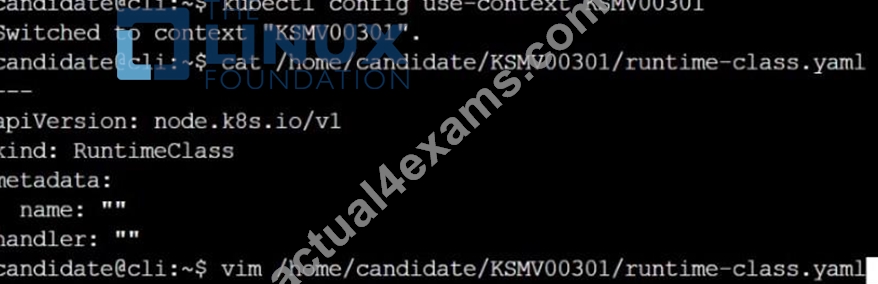

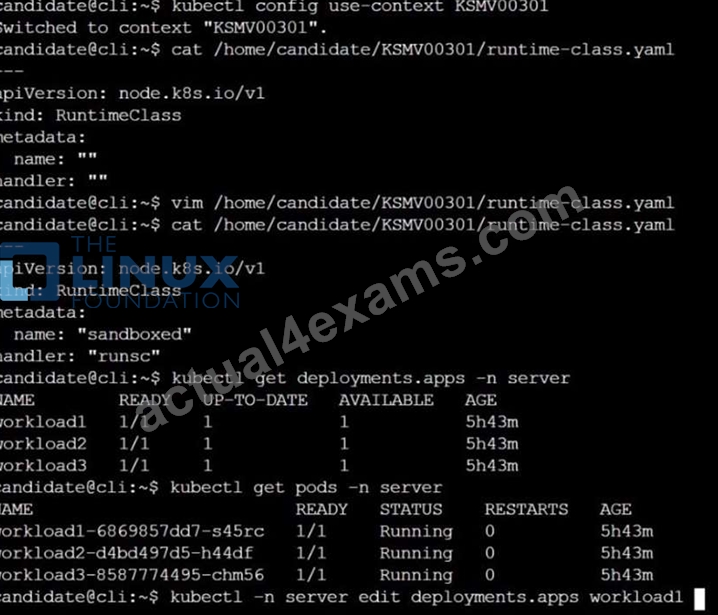

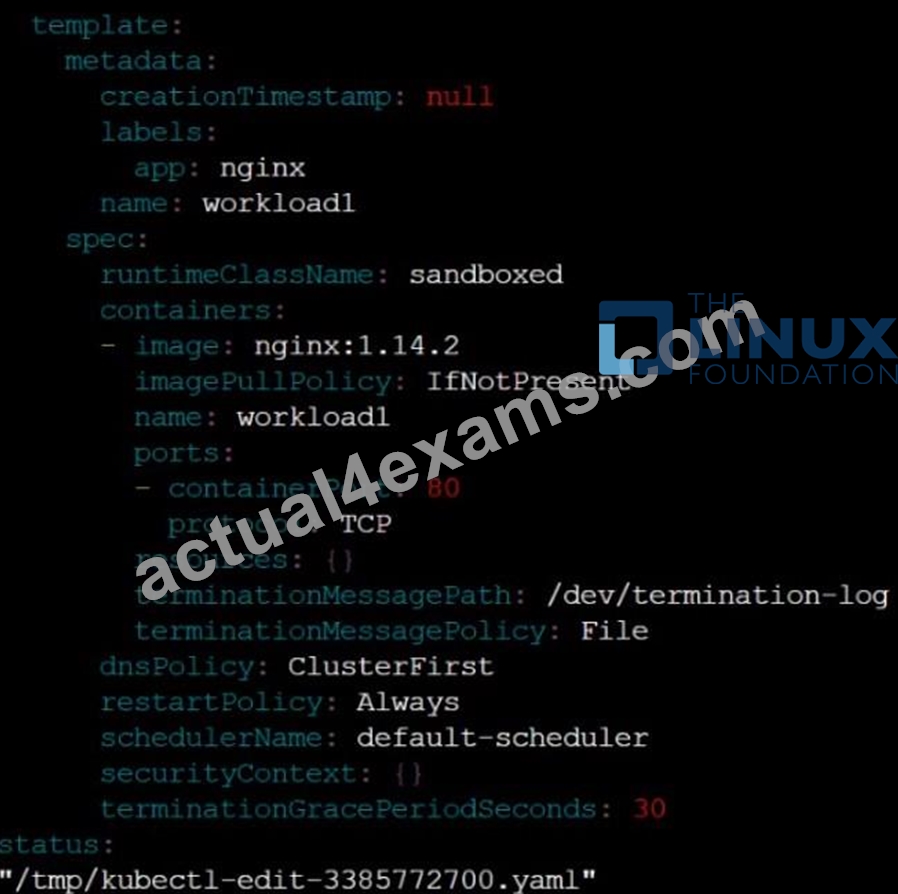

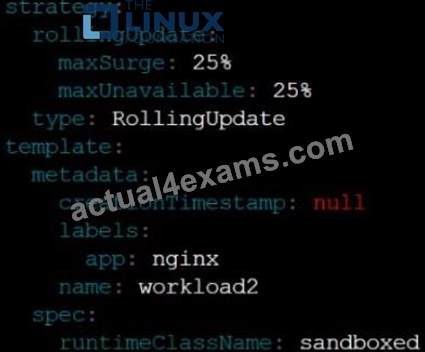
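For the task as stated (user john, Role john-role, RoleBinding john-role-binding), the workflow might be sketched as below. The file names and the CSR object name john are illustrative choices, and the namespace john is assumed to exist already.

```shell
#!/bin/sh
# Sketch only: file names and the CSR object name "john" are illustrative.
WORK_DIR="${WORK_DIR:-$(mktemp -d)}"

# 1. Private key and certificate signing request for the user john.
make_csr() {
  openssl genrsa -out "$WORK_DIR/john.key" 2048
  openssl req -new -key "$WORK_DIR/john.key" -subj "/CN=john" -out "$WORK_DIR/john.csr"
}

# 2. Wrap the base64-encoded request in a CertificateSigningRequest object.
write_csr_manifest() {
  cat > "$WORK_DIR/john-csr.yaml" <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: john
spec:
  request: $(base64 < "$WORK_DIR/john.csr" | tr -d '\n')
  signerName: kubernetes.io/kube-apiserver-client
  usages:
    - client auth
EOF
}

# 3. Submit, approve, fetch the certificate, then create and check the RBAC objects.
submit_and_bind() {
  kubectl apply -f "$WORK_DIR/john-csr.yaml"
  kubectl certificate approve john
  kubectl get csr john -o jsonpath='{.status.certificate}' | base64 -d > "$WORK_DIR/john.crt"
  kubectl create role john-role --verb=list --resource=secrets,pods -n john
  kubectl create rolebinding john-role-binding --role=john-role --user=john -n john
  kubectl auth can-i list pods --as=john -n john
  kubectl auth can-i list secrets --as=john -n john
}
```

The functions are meant to be run in order (make_csr, write_csr_manifest, submit_and_bind); both kubectl auth can-i calls should answer yes once the RoleBinding exists.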

NEW QUESTION # 21

SIMULATION

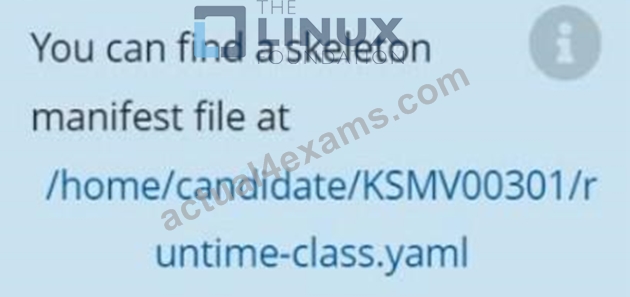

Create a RuntimeClass named untrusted using the prepared runtime handler named runsc.

Create a Pod using the image alpine:3.13.2 in the namespace default that runs with the gVisor RuntimeClass.

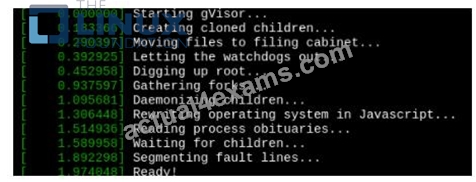

Verify: Exec into the Pod and run dmesg; you will see output like this:

- A. Send us your feedback on it.

Answer: A
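A minimal sketch of the two objects follows. The Pod name sandboxed-alpine and the sleep command are illustrative assumptions; only the RuntimeClass name, the handler, the image, and the namespace come from the task.

```shell
#!/bin/sh
# Sketch only: the Pod name "sandboxed-alpine" and the sleep command are illustrative.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
MANIFEST="$OUT_DIR/gvisor.yaml"

cat > "$MANIFEST" <<'EOF'
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: untrusted
handler: runsc
---
apiVersion: v1
kind: Pod
metadata:
  name: sandboxed-alpine
  namespace: default
spec:
  runtimeClassName: untrusted
  containers:
    - name: alpine
      image: alpine:3.13.2
      command: ["sleep", "3600"]
EOF

# From a machine with cluster access: create both objects, wait for the Pod,
# then read the kernel log, which should mention gVisor rather than the host kernel.
apply_and_verify() {
  kubectl apply -f "$MANIFEST"
  kubectl wait --for=condition=Ready pod/sandboxed-alpine --timeout=60s
  kubectl exec sandboxed-alpine -- dmesg
}
echo "manifest written to $MANIFEST"
```

RuntimeClass is cluster-scoped, so only the Pod carries a namespace.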

NEW QUESTION # 22

Context:

Cluster: prod

Master node: master1

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context prod

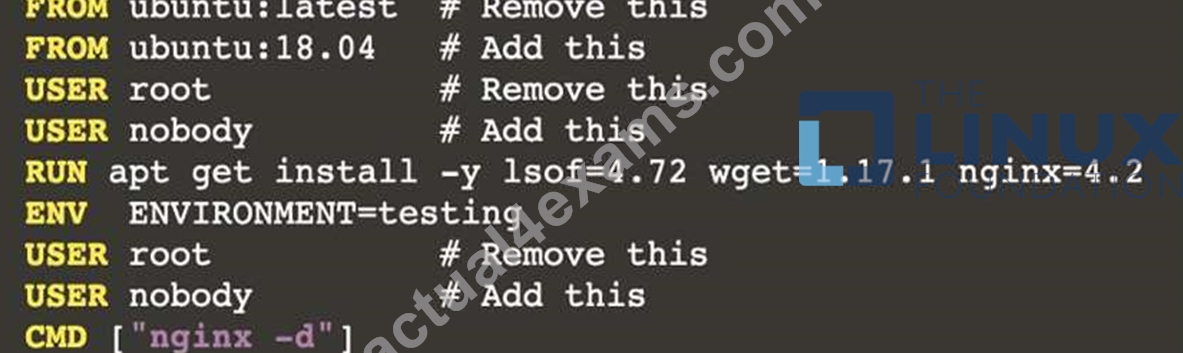

Task: Analyse and edit the given Dockerfile /home/cert_masters/Dockerfile (based on the ubuntu:18.04 image), fixing two instructions in the file that are prominent security/best-practice issues.

Analyse and edit the given manifest file /home/cert_masters/mydeployment.yaml, fixing two fields in the file that are prominent security/best-practice issues.

Note: Don't add or remove configuration settings; only modify the existing configuration settings, so that two configuration settings in each file are no longer security/best-practice concerns. Should you need an unprivileged user for any of the tasks, use user nobody with user id 65535.

Answer:

Explanation:

1. For Dockerfile: Fix the image version and the user name.

2. For mydeployment.yaml: Fix the security context.

Explanation

[desk@cli] $ vim /home/cert_masters/Dockerfile

FROM ubuntu:latest # Remove this

FROM ubuntu:18.04 # Add this

USER root # Keep: installing packages requires root

RUN apt-get install -y lsof=4.72 wget=1.17.1 nginx=4.2

ENV ENVIRONMENT=testing

USER root # Remove this

USER nobody # Add this

CMD ["nginx -d"]

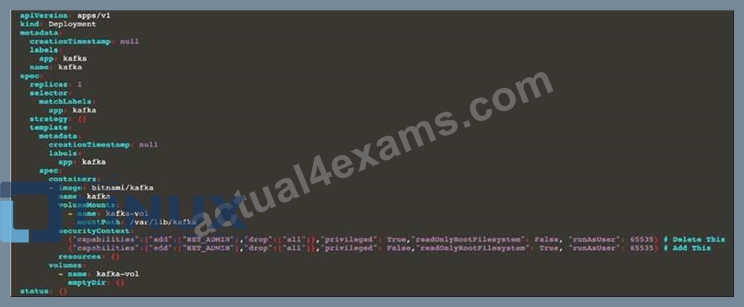

[desk@cli] $ vim /home/cert_masters/mydeployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

creationTimestamp: null

labels:

app: kafka

name: kafka

spec:

replicas: 1

selector:

matchLabels:

app: kafka

strategy: {}

template:

metadata:

creationTimestamp: null

labels:

app: kafka

spec:

containers:

- image: bitnami/kafka

name: kafka

volumeMounts:

- name: kafka-vol

mountPath: /var/lib/kafka

securityContext:

{"capabilities":{"add":["NET_ADMIN"],"drop":["all"]},"privileged": true,"readOnlyRootFilesystem": false, "runAsUser": 65535} # Delete this

{"capabilities":{"add":["NET_ADMIN"],"drop":["all"]},"privileged": false,"readOnlyRootFilesystem": true, "runAsUser": 65535} # Add this

resources: {}

volumes:

- name: kafka-vol

emptyDir: {}

status: {}

Pictorial View: [desk@cli] $ vim /home/cert_masters/mydeployment.yaml

NEW QUESTION # 23

Cluster: dev

Master node: master1

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

Task:

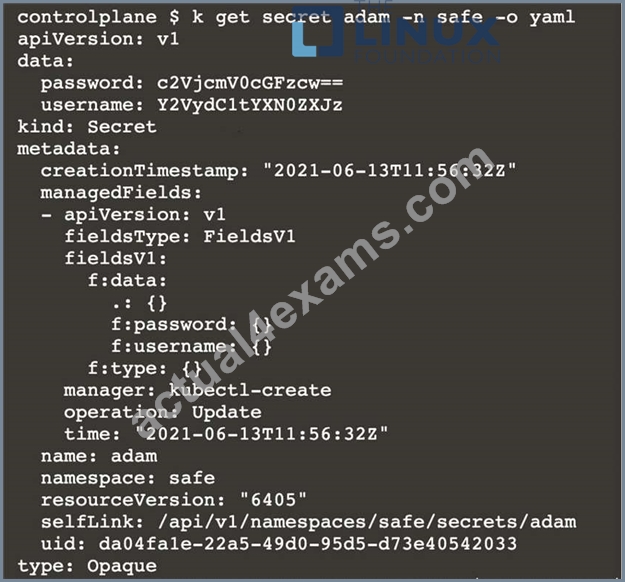

Retrieve the content of the existing secret named adam in the safe namespace.

Store the username field in a file named /home/cert-masters/username.txt, and the password field in a file named /home/cert-masters/password.txt.

1. You must create both files; they don't exist yet.

2. Do not use/modify the created files in the following steps, create new temporary files if needed.

Create a new secret named newsecret in the safe namespace, with the following content:

Username: dbadmin

Password: moresecurepas

Finally, create a new Pod that has access to the secret newsecret via a volume:

Namespace: safe

Pod name: mysecret-pod

Container name: db-container

Image: redis

Volume name: secret-vol

Mount path: /etc/mysecret

Answer:

Explanation:

1. Get the secret, base64-decode its fields, and save them in files

k get secret adam -n safe -o yaml

2. Create new secret using --from-literal

[desk@cli] $ k create secret generic newsecret -n safe --from-literal=username=dbadmin --from-literal=password=moresecurepas

3. Mount it as volume of db-container of mysecret-pod

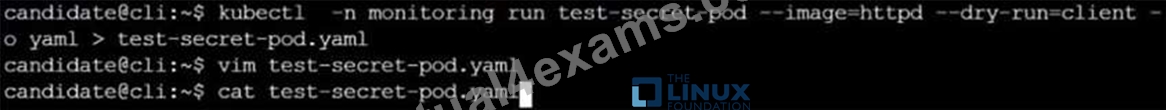

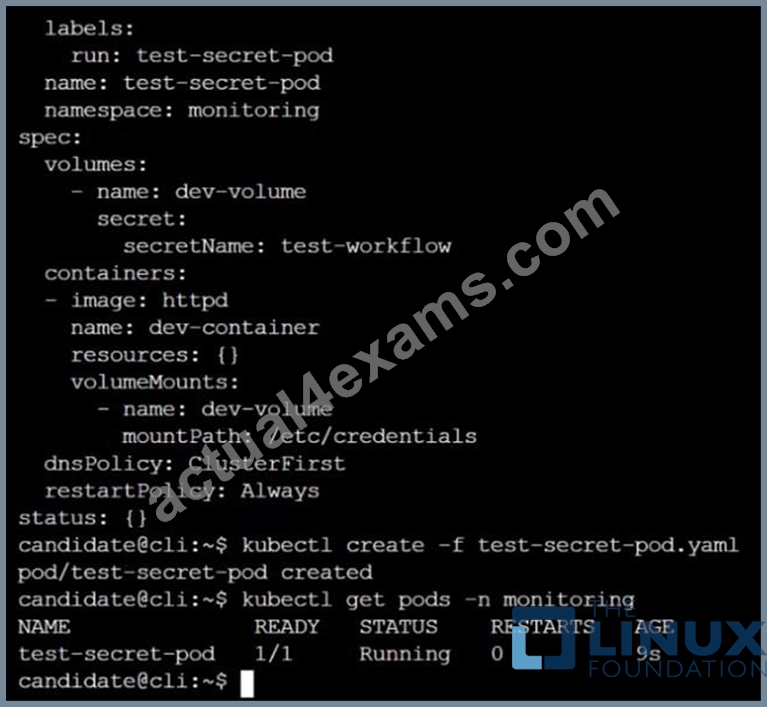
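Step 1 above can be done without copying values by hand. The sketch below extracts each field with jsonpath and decodes it; OUT_DIR defaults to a scratch directory here (the task's target directory is /home/cert-masters), and the sample value is only a local illustration of the decoding step.

```shell
#!/bin/sh
# Sketch only. OUT_DIR defaults to a scratch directory; for the task itself
# it would be /home/cert-masters.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Secret values are base64-encoded, not encrypted, so decoding is enough.
decode() { base64 -d; }

# Local illustration with a sample value (the base64 form of "dbadmin").
printf '%s' 'ZGJhZG1pbg==' | decode > "$OUT_DIR/sample.txt"

# Against the cluster: extract each field with jsonpath and decode it.
save_adam_secret() {
  kubectl get secret adam -n safe -o jsonpath='{.data.username}' | decode > "$OUT_DIR/username.txt"
  kubectl get secret adam -n safe -o jsonpath='{.data.password}' | decode > "$OUT_DIR/password.txt"
}
```

Calling save_adam_secret with OUT_DIR=/home/cert-masters writes the two files the task asks for.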

Explanation

[desk@cli] $ k create secret generic newsecret -n safe --from-literal=username=dbadmin --from-literal=password=moresecurepas

secret/newsecret created

[desk@cli] $ vim /home/certs_masters/secret-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: mysecret-pod

namespace: safe

labels:

run: mysecret-pod

spec:

containers:

- name: db-container

image: redis

volumeMounts:

- name: secret-vol

mountPath: /etc/mysecret

readOnly: true

volumes:

- name: secret-vol

secret:

secretName: newsecret

[desk@cli] $ k apply -f /home/certs_masters/secret-pod.yaml

pod/mysecret-pod created

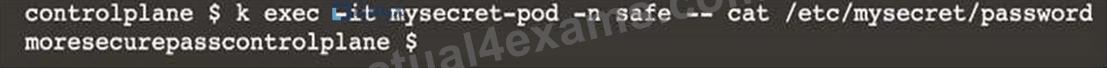

[desk@cli] $ k exec -it mysecret-pod -n safe -- cat /etc/mysecret/username

dbadmin

[desk@cli] $ k exec -it mysecret-pod -n safe -- cat /etc/mysecret/password

moresecurepas

NEW QUESTION # 24

SIMULATION

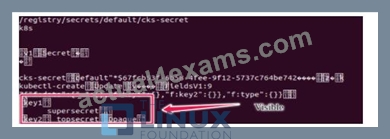

Secrets stored in etcd are not secure at rest. You can use the etcdctl command-line utility to read a secret's value, for example:

ETCDCTL_API=3 etcdctl get /registry/secrets/default/cks-secret --cacert="ca.crt" --cert="server.crt" --key="server.key"

Output

Using an EncryptionConfiguration, create the manifest that secures the resource secrets using the providers AES-CBC and identity, so that secret data is encrypted at rest, and ensure all secrets are encrypted with the new configuration.

- A. Send us the Feedback on it.

Answer: A
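A sketch of such an EncryptionConfiguration is shown below. The key name key1 and the output location are illustrative assumptions; the provider names aescbc and identity and the --encryption-provider-config flag are the standard ones.

```shell
#!/bin/sh
# Sketch only: the key name "key1" and the file location are illustrative.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
CONFIG="$OUT_DIR/encryption-config.yaml"

# aescbc needs a base64-encoded 32-byte key.
KEY="$(head -c 32 /dev/urandom | base64 | tr -d '\n')"

cat > "$CONFIG" <<EOF
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # The first provider is used for writes; identity stays as a read
      # fallback for secrets that are still stored unencrypted.
      - aescbc:
          keys:
            - name: key1
              secret: ${KEY}
      - identity: {}
EOF
chmod 600 "$CONFIG"

# After pointing kube-apiserver at the file with
#   --encryption-provider-config=<path mounted into the API server Pod>
# and waiting for it to restart, rewrite every secret so it is stored encrypted.
reencrypt_all_secrets() {
  kubectl get secrets --all-namespaces -o json | kubectl replace -f -
}
echo "config written to $CONFIG"
```

After re-encryption, the etcdctl command from the task should show the stored value prefixed with k8s:enc:aescbc:v1: instead of plain text.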

NEW QUESTION # 25

Analyze and edit the given Dockerfile

FROM ubuntu:latest

RUN apt-get update -y

RUN apt-get install nginx -y

COPY entrypoint.sh /

ENTRYPOINT ["/entrypoint.sh"]

USER ROOT

Fix two instructions in the file that are prominent security best-practice issues.

Analyze and edit the deployment manifest file

apiVersion: v1

kind: Pod

metadata:

name: security-context-demo-2

spec:

securityContext:

runAsUser: 1000

containers:

- name: sec-ctx-demo-2

image: gcr.io/google-samples/node-hello:1.0

securityContext:

runAsUser: 0

privileged: True

allowPrivilegeEscalation: false

Fix two fields in the file that are prominent security best-practice issues.

Don't add or remove configuration settings; only modify the existing configuration settings.

Whenever you need an unprivileged user for any of the tasks, use user test-user with the user id 5487.

Answer:

Explanation:

FROM debian:latest

MAINTAINER [email protected]

# 1 - RUN

RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -yq apt-utils

RUN DEBIAN_FRONTEND=noninteractive apt-get install -yq htop

RUN apt-get clean

# 2 - CMD

#CMD ["htop"]

#CMD ["ls", "-l"]

# 3 - WORKDIR and ENV

WORKDIR /root

ENV DZ version1

$ docker image build -t bogodevops/demo .

Sending build context to Docker daemon 3.072kB

Step 1/7 : FROM debian:latest

---> be2868bebaba

Step 2/7 : MAINTAINER [email protected]

---> Using cache

---> e2eef476b3fd

Step 3/7 : RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -yq apt-utils

---> Using cache

---> 32fd044c1356

Step 4/7 : RUN DEBIAN_FRONTEND=noninteractive apt-get install -yq htop

---> Using cache

---> 0a5b514a209e

Step 5/7 : RUN apt-get clean

---> Using cache

---> 5d1578a47c17

Step 6/7 : WORKDIR /root

---> Using cache

---> 6b1c70e87675

Step 7/7 : ENV DZ version1

---> Using cache

---> cd195168c5c7

Successfully built cd195168c5c7

Successfully tagged bogodevops/demo:latest
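For the Dockerfile and Pod manifest given in this question, the four changes might look like the sketch below. It rests on assumptions: the task does not name a base image version, so ubuntu:22.04 is an illustrative pin, and the numeric UID 5487 is used for test-user because a numeric USER works even when the image has no passwd entry for that name.

```shell
#!/bin/sh
# Sketch only: ubuntu:22.04 is an illustrative version pin, not given by the task.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Dockerfile: pin the base image instead of :latest, and drop root at the end.
cat > "$OUT_DIR/Dockerfile" <<'EOF'
FROM ubuntu:22.04
RUN apt-get update -y
RUN apt-get install nginx -y
COPY entrypoint.sh /
ENTRYPOINT ["/entrypoint.sh"]
USER 5487
EOF

# Pod: the container no longer runs as root and is no longer privileged.
cat > "$OUT_DIR/pod.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: security-context-demo-2
spec:
  securityContext:
    runAsUser: 1000
  containers:
    - name: sec-ctx-demo-2
      image: gcr.io/google-samples/node-hello:1.0
      securityContext:
        runAsUser: 5487
        privileged: false
        allowPrivilegeEscalation: false
EOF
echo "files written to $OUT_DIR"
```

No settings are added or removed in either file; only the values of FROM, USER, runAsUser, and privileged change, which matches the constraint in the task.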

NEW QUESTION # 26

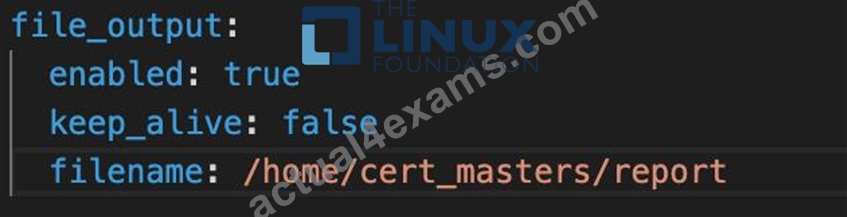

You must complete this task on the following cluster/nodes:

Cluster: trace

Master node: master

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context trace

Given: You may use Sysdig or Falco documentation.

Task: Use detection tools to detect anomalies like processes spawning and executing something weird frequently in the single container belonging to Pod tomcat.

Two tools are available to use:

1. falco

2. sysdig

Tools are pre-installed on the worker1 node only.

Analyse the container's behaviour for at least 40 seconds, using filters that detect newly spawning and executing processes.

Store an incident file at /home/cert_masters/report, in the following format:

[timestamp],[uid],[processName]

Note: Make sure to store the incident file on the cluster's worker node; don't move it to the master node.

Answer:

Explanation:

$vim /etc/falco/falco_rules.local.yaml

- rule: Container Drift Detected (open+create)

desc: New executable created in a container due to open+create

condition: >

evt.type in (open,openat,creat) and

evt.is_open_exec=true and

container and

not runc_writing_exec_fifo and

not runc_writing_var_lib_docker and

not user_known_container_drift_activities and

evt.rawres>=0

output: >

%evt.time,%user.uid,%proc.name # Add this/Refer falco documentation

priority: ERROR

$kill -1 <PID of falco>

Explanation

[desk@cli] $ ssh node01

[node01@cli] $ vim /etc/falco/falco_rules.yaml

Search for Container Drift Detected and paste the rule into falco_rules.local.yaml:

[node01@cli] $ vim /etc/falco/falco_rules.local.yaml

- rule: Container Drift Detected (open+create)

desc: New executable created in a container due to open+create

condition: >

evt.type in (open,openat,creat) and

evt.is_open_exec=true and

container and

not runc_writing_exec_fifo and

not runc_writing_var_lib_docker and

not user_known_container_drift_activities and

evt.rawres>=0

output: >

%evt.time,%user.uid,%proc.name # Add this/Refer falco documentation

priority: ERROR

[node01@cli] $ vim /etc/falco/falco.yaml
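As an alternative to editing Falco rules, the same report could be produced with sysdig, the second tool the task allows. This is a sketch under an assumption: the container is taken to be named tomcat, which should be checked against the real container name or ID on the worker node.

```shell
#!/bin/sh
# Sketch only, to be run on the worker node where sysdig is installed.
# Assumption: the container's name is "tomcat"; check the real name or ID with
# `crictl ps` (or `docker ps`) and adjust CONTAINER accordingly.
CONTAINER="${CONTAINER:-tomcat}"
REPORT="${REPORT:-/home/cert_masters/report}"
DURATION="${DURATION:-40}"

capture_exec_events() {
  # -M stops the capture after the given number of seconds.
  # -p sets the output format; execve events mark newly executed processes.
  sysdig -M "$DURATION" \
    -p '%evt.time,%user.uid,%proc.name' \
    "container.name=$CONTAINER and evt.type=execve" > "$REPORT"
}
```

Running capture_exec_events as root writes one line per executed process in the [timestamp],[uid],[processName] format the task requires.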

NEW QUESTION # 27

SIMULATION

Before making any changes, build the Dockerfile with the tag base:v1.

Now analyze and edit the given Dockerfile (based on ubuntu:16.04).

Fix two instructions present in the file, considering both security and image size.

Dockerfile:

FROM ubuntu:latest

RUN apt-get update -y

RUN apt install nginx -y

COPY entrypoint.sh /

RUN useradd ubuntu

ENTRYPOINT ["/entrypoint.sh"]

USER ubuntu

entrypoint.sh

#!/bin/bash

echo "Hello from CKS"

After fixing the Dockerfile, build the Docker image with the tag base:v2.

To verify: Check the size of the image before and after the build.

- A. Send us the Feedback on it.

Answer: A

NEW QUESTION # 28

A service is running on port 389 on the system. Find the process ID of the process, store the names of all its open files in /candidate/KH77539/files.txt, and delete the binary.

Answer:

Explanation:

root# netstat -ltnup

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:17600 0.0.0.0:* LISTEN 1293/dropbox

tcp 0 0 127.0.0.1:17603 0.0.0.0:* LISTEN 1293/dropbox

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 575/sshd

tcp 0 0 127.0.0.1:9393 0.0.0.0:* LISTEN 900/perl

tcp 0 0 :::80 :::* LISTEN 9583/docker-proxy

tcp 0 0 :::443 :::* LISTEN 9571/docker-proxy

udp 0 0 0.0.0.0:68 0.0.0.0:* 8822/dhcpcd

...

root# netstat -ltnup | grep ':22'

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 575/sshd

The ss command is the replacement of the netstat command.

Now let's see how to use the ss command to see which process is listening on port 22:

root# ss -ltnup 'sport = :22'

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port

tcp LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:("sshd",pid=575,fd=3))
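The examples above use port 22; applied to port 389 from the task, the whole sequence might be sketched as below. The helper names extract_pid and investigate_port are illustrative, and the script assumes root privileges on the affected node.

```shell
#!/bin/sh
# Sketch only, to be run as root on the affected node.
PORT="${PORT:-389}"
OUT_FILE="${OUT_FILE:-/candidate/KH77539/files.txt}"

# Pull a pid=<n> value out of ss output (first matching line).
extract_pid() { sed -n 's/.*pid=\([0-9][0-9]*\).*/\1/p' | head -n 1; }

investigate_port() {
  pid="$(ss -ltnup "sport = :$PORT" | extract_pid)"
  [ -n "$pid" ] || { echo "nothing is listening on port $PORT" >&2; return 1; }
  binary="$(readlink -f "/proc/$pid/exe")"   # resolve the binary path first
  lsof -p "$pid" > "$OUT_FILE"               # record the process's open files
  rm -f "$binary"                            # delete the binary
}
```

The binary path is resolved through /proc/<pid>/exe before anything is deleted, so the script does not depend on the program being on the PATH.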

NEW QUESTION # 29

Context

This cluster uses containerd as CRI runtime.

Containerd's default runtime handler is runc. Containerd has been prepared to support an additional runtime handler, runsc (gVisor).

Task

Create a RuntimeClass named sandboxed using the prepared runtime handler named runsc.

Update all Pods in the namespace server to run on gVisor.

Answer:

Explanation:

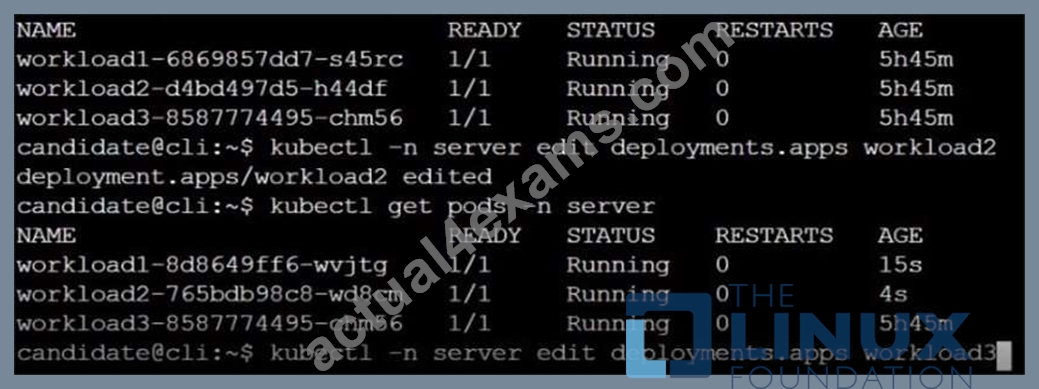

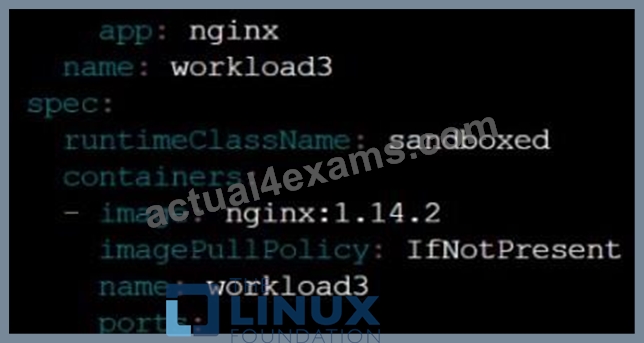

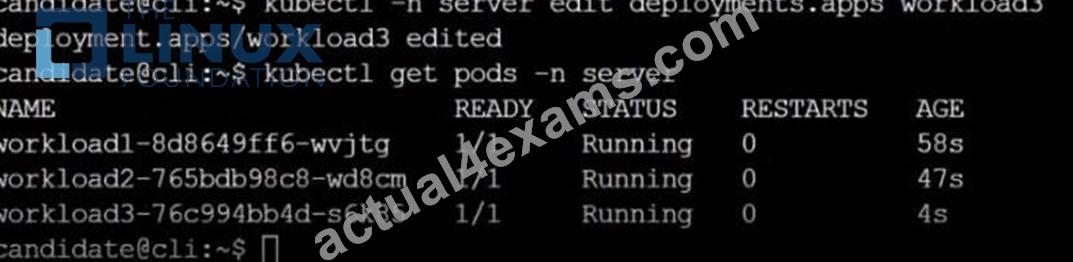

NEW QUESTION # 30

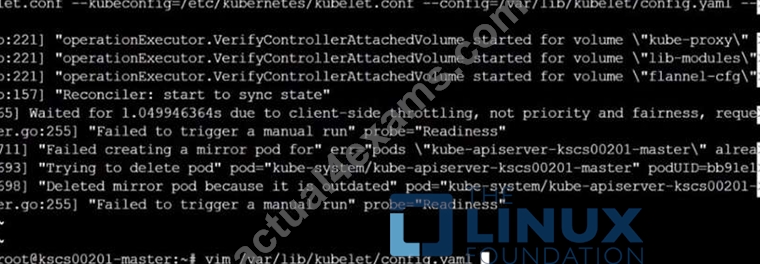

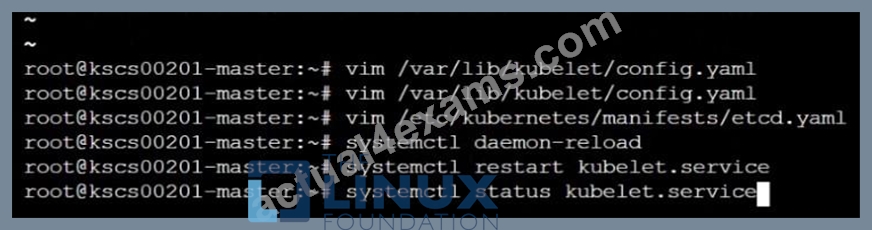

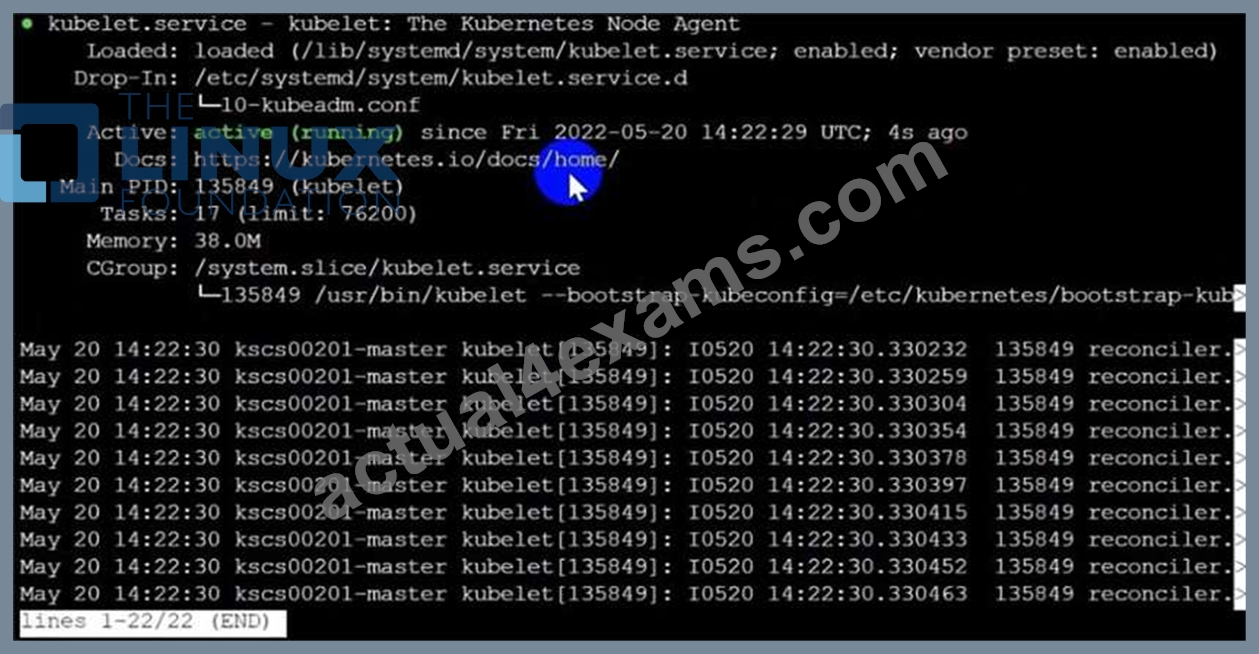

Fix all issues via configuration and restart the affected components to ensure the new setting takes effect.

Fix all of the following violations that were found against the API server:

a. Ensure the --authorization-mode argument includes RBAC.

b. Ensure the --authorization-mode argument includes Node.

c. Ensure that the --profiling argument is set to false.

Fix all of the following violations that were found against the Kubelet:

a. Ensure the --anonymous-auth argument is set to false.

b. Ensure that the --authorization-mode argument is set to Webhook.

Fix all of the following violations that were found against the ETCD:

a. Ensure that the --auto-tls argument is not set to true.

Hint: Use the kube-bench tool.

Answer:

Explanation:

API server:

Ensure the --authorization-mode argument includes RBAC

Turn on Role Based Access Control. Role Based Access Control (RBAC) allows fine-grained control over the operations that different entities can perform on different objects in the cluster. It is recommended to use the RBAC authorization mode.

Fix - Buildtime

Kubernetes

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

component: kube-apiserver

tier: control-plane

name: kube-apiserver

namespace: kube-system

spec:

containers:

- command:

- kube-apiserver

- --authorization-mode=RBAC,Node

image: gcr.io/google_containers/kube-apiserver-amd64:v1.6.0

livenessProbe:

failureThreshold: 8

httpGet:

host: 127.0.0.1

path: /healthz

port: 6443

scheme: HTTPS

initialDelaySeconds: 15

timeoutSeconds: 15

name: kube-apiserver-should-pass

resources:

requests:

cpu: 250m

volumeMounts:

- mountPath: /etc/kubernetes/

name: k8s

readOnly: true

- mountPath: /etc/ssl/certs

name: certs

- mountPath: /etc/pki

name: pki

hostNetwork: true

volumes:

- hostPath:

path: /etc/kubernetes

name: k8s

- hostPath:

path: /etc/ssl/certs

name: certs

- hostPath:

path: /etc/pki

name: pki

Ensure the --authorization-mode argument includes Node

Remediation: Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml on the master node and set the --authorization-mode parameter to a value that includes Node.

--authorization-mode=Node,RBAC

Audit:

/bin/ps -ef | grep kube-apiserver | grep -v grep

Expected result:

'Node,RBAC' has 'Node'

Ensure that the --profiling argument is set to false

Remediation: Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml on the master node and set the below parameter.

--profiling=false

Audit:

/bin/ps -ef | grep kube-apiserver | grep -v grep

Expected result:

'false' is equal to 'false'

Fix all of the following violations that were found against the Kubelet:

Ensure the --anonymous-auth argument is set to false.

Remediation: If using a Kubelet config file, edit the file to set authentication: anonymous: enabled to false. If using executable arguments, edit the kubelet service file /etc/systemd/system/kubelet.service.d/10-kubeadm.conf on each worker node and set the below parameter in KUBELET_SYSTEM_PODS_ARGS variable.

--anonymous-auth=false

Based on your system, restart the kubelet service. For example:

systemctl daemon-reload

systemctl restart kubelet.service

Audit:

/bin/ps -fC kubelet

Audit Config:

/bin/cat /var/lib/kubelet/config.yaml

Expected result:

'false' is equal to 'false'

Ensure that the --authorization-mode argument is set to Webhook.

Remediation: If using a Kubelet config file, edit the file to set authorization: mode to Webhook. If using executable arguments, set --authorization-mode=Webhook on each worker node, then restart the kubelet service as shown above.

Audit:

docker inspect kubelet | jq -e '.[0].Args[] | match("--authorization-mode=Webhook").string'

Returned Value: --authorization-mode=Webhook

Fix all of the following violations that were found against the ETCD:

a. Ensure that the --auto-tls argument is not set to true

Do not use self-signed certificates for TLS. etcd is a highly available key-value store used by Kubernetes deployments for persistent storage of all of its REST API objects. These objects are sensitive in nature and should not be available to unauthenticated clients. You should enable client authentication via valid certificates to secure access to the etcd service.

Fix - Buildtime

Kubernetes

apiVersion: v1

kind: Pod

metadata:

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ""

creationTimestamp: null

labels:

component: etcd

tier: control-plane

name: etcd

namespace: kube-system

spec:

containers:

- command:

- etcd

- --auto-tls=false

image: k8s.gcr.io/etcd-amd64:3.2.18

imagePullPolicy: IfNotPresent

livenessProbe:

exec:

command:

- /bin/sh

- -ec

- ETCDCTL_API=3 etcdctl --endpoints=https://[192.168.22.9]:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt --key=/etc/kubernetes/pki/etcd/healthcheck-client.key get foo

failureThreshold: 8

initialDelaySeconds: 15

timeoutSeconds: 15

name: etcd

resources: {}

volumeMounts:

- mountPath: /var/lib/etcd

name: etcd-data

- mountPath: /etc/kubernetes/pki/etcd

name: etcd-certs

hostNetwork: true

priorityClassName: system-cluster-critical

volumes:

- hostPath:

path: /var/lib/etcd

type: DirectoryOrCreate

name: etcd-data

- hostPath:

path: /etc/kubernetes/pki/etcd

type: DirectoryOrCreate

name: etcd-certs

status: {}


NEW QUESTION # 31

SIMULATION

Given an existing Pod named test-web-pod running in the namespace test-system, edit the existing Role bound to the Pod's ServiceAccount named sa-backend to only allow performing get operations on endpoints.

Create a new Role named test-system-role-2 in the namespace test-system, which can perform patch operations, on resources of type statefulsets.

Create a new RoleBinding named test-system-role-2-binding binding the newly created Role to the Pod's ServiceAccount sa-backend.

- A. Send us your feedback on this.

Answer: A
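The three steps could be sketched with kubectl as below. ROLE_NAME is hypothetical: the name of the Role currently bound to sa-backend is not given in the task and has to be looked up first.

```shell
#!/bin/sh
# Sketch only. ROLE_NAME is hypothetical: look up the Role actually bound to
# sa-backend (see find_bound_role) before editing it.
NS="test-system"
SA="sa-backend"
ROLE_NAME="${ROLE_NAME:-existing-role}"

# Show the Pod's ServiceAccount and the RoleBindings (with their subjects).
find_bound_role() {
  kubectl get pod test-web-pod -n "$NS" -o jsonpath='{.spec.serviceAccountName}'; echo
  kubectl get rolebindings -n "$NS" -o wide
}

# Replace the existing Role's rules with a single rule: get on endpoints.
restrict_existing_role() {
  kubectl create role "$ROLE_NAME" -n "$NS" --verb=get --resource=endpoints \
    --dry-run=client -o yaml | kubectl replace -n "$NS" -f -
}

# Create the second Role, bind it to the ServiceAccount, and check the result.
create_second_role() {
  kubectl create role test-system-role-2 -n "$NS" --verb=patch --resource=statefulsets
  kubectl create rolebinding test-system-role-2-binding -n "$NS" \
    --role=test-system-role-2 --serviceaccount="$NS:$SA"
  kubectl auth can-i patch statefulsets -n "$NS" --as="system:serviceaccount:$NS:$SA"
}
```

Editing the Role interactively with kubectl edit role achieves the same as restrict_existing_role; the replace form is used here only so the change is reproducible.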

NEW QUESTION # 32

Create a new NetworkPolicy named deny-all in the namespace testing which denies all ingress and egress traffic.

Answer:

Explanation:

You can create a "default" isolation policy for a namespace by creating a NetworkPolicy that selects all pods but does not allow any ingress traffic to those pods.

---

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: default-deny-ingress

spec:

podSelector: {}

policyTypes:

- Ingress

You can create a "default" egress isolation policy for a namespace by creating a NetworkPolicy that selects all pods but does not allow any egress traffic from those pods.

---

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: default-deny-egress

spec:

podSelector: {}

policyTypes:

- Egress

Default deny all ingress and all egress traffic

You can create a "default" policy for a namespace which prevents all ingress AND egress traffic by creating the following NetworkPolicy in that namespace.

---

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: default-deny-all

spec:

podSelector: {}

policyTypes:

- Ingress

- Egress

This ensures that even pods that aren't selected by any other NetworkPolicy will not be allowed ingress or egress traffic.
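Adapted to the names in this question (policy deny-all, namespace testing), the combined policy could be written and applied as sketched below; only the output location is an assumption.

```shell
#!/bin/sh
# Sketch only: the manifest is written to a scratch directory.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
MANIFEST="$OUT_DIR/deny-all.yaml"

# An empty podSelector selects every Pod in the namespace; listing both
# policy types without any rules denies all ingress and egress traffic.
cat > "$MANIFEST" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-all
  namespace: testing
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress
EOF

apply_policy() {
  kubectl apply -f "$MANIFEST"
  kubectl describe networkpolicy deny-all -n testing
}
echo "manifest written to $MANIFEST"
```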

NEW QUESTION # 33

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

A default-deny NetworkPolicy avoids accidentally exposing a Pod in a namespace that doesn't have any other NetworkPolicy defined.

Task: Create a new default-deny NetworkPolicy named deny-network in the namespace test for all traffic of type Ingress + Egress.

The new NetworkPolicy must deny all Ingress + Egress traffic in the namespace test.

Apply the newly created default-deny NetworkPolicy to all Pods running in namespace test.

You can find a skeleton manifest file at /home/cert_masters/network-policy.yaml

Answer:

Explanation:

master1 $ k get pods -n test --show-labels

NAME READY STATUS RESTARTS AGE LABELS

test-pod 1/1 Running 0 34s role=test,run=test-pod

testing 1/1 Running 0 17d run=testing

$ vim netpol.yaml

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: deny-network

namespace: test

spec:

podSelector: {}

policyTypes:

- Ingress

- Egress

master1 $ k apply -f netpol.yaml

Reference: https://kubernetes.io/docs/concepts/services-networking/network-policies/

NEW QUESTION # 34

SIMULATION

Fix all issues via configuration and restart the affected components to ensure the new setting takes effect.

Fix all of the following violations that were found against the API server:

a. Ensure the --authorization-mode argument includes RBAC.

b. Ensure the --authorization-mode argument includes Node.

c. Ensure that the --profiling argument is set to false.

Fix all of the following violations that were found against the Kubelet:

a. Ensure the --anonymous-auth argument is set to false.

b. Ensure that the --authorization-mode argument is set to Webhook.

Fix all of the following violations that were found against the ETCD:

a. Ensure that the --auto-tls argument is not set to true.

Hint: Use the kube-bench tool.

Answer:

Explanation:

The remediation steps are the same as in the explanation for QUESTION # 30 above.

NEW QUESTION # 35

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context prod-account

Context:

A Role bound to a Pod's ServiceAccount grants overly permissive permissions. Complete the following tasks to reduce the set of permissions.

Task:

Given an existing Pod named web-pod running in the namespace database.

1. Edit the existing Role bound to the Pod's ServiceAccount test-sa to only allow performing get operations, only on resources of type Pods.

2. Create a new Role named test-role-2 in the namespace database, which only allows performing update operations, only on resources of type statefulsets.

3. Create a new RoleBinding named test-role-2-bind binding the newly created Role to the Pod's ServiceAccount.

Note: Don't delete the existing RoleBinding.

Answer:

Explanation:

$ k edit role test-role -n database

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: "2021-06-04T11:12:23Z"

name: test-role

namespace: database

resourceVersion: "1139"

selfLink: /apis/rbac.authorization.k8s.io/v1/namespaces/database/roles/test-role

uid: 49949265-6e01-499c-94ac-5011d6f6a353

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- "*" # Delete

- get # Fixed

$ k create role test-role-2 -n database --resource statefulset --verb update

$ k create rolebinding test-role-2-bind -n database --role test-role-2 --serviceaccount=database:test-sa

Explanation

[desk@cli]$ k get pods -n database

NAME READY STATUS RESTARTS AGE LABELS

web-pod 1/1 Running 0 34s run=web-pod

[desk@cli]$ k get roles -n database

test-role

[desk@cli]$ k edit role test-role -n database

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: "2021-06-13T11:12:23Z"

name: test-role

namespace: database

resourceVersion: "1139"

selfLink: /apis/rbac.authorization.k8s.io/v1/namespaces/database/roles/test-role

uid: 49949265-6e01-499c-94ac-5011d6f6a353

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- "*" # Delete this

- get # Replace by this

[desk@cli]$ k create role test-role-2 -n database --resource statefulset --verb update

role.rbac.authorization.k8s.io/test-role-2 created

[desk@cli]$ k create rolebinding test-role-2-bind -n database --role test-role-2 --serviceaccount=database:test-sa

rolebinding.rbac.authorization.k8s.io/test-role-2-bind created

Reference: https://kubernetes.io/docs/reference/access-authn-authz/rbac/
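For readers who prefer manifests over imperative commands, the Role and RoleBinding from tasks 2 and 3 could be written roughly as follows. This is a sketch, not output captured from the cluster. It assumes StatefulSets are addressed through the apps API group, which is where kubectl resolves the statefulset resource.

```yaml
# Role that only allows "update" on StatefulSets in the database namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: test-role-2
  namespace: database
rules:
- apiGroups: ["apps"]
  resources: ["statefulsets"]
  verbs: ["update"]
---
# Bind the Role to the Pod's ServiceAccount (test-sa)
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: test-role-2-bind
  namespace: database
subjects:
- kind: ServiceAccount
  name: test-sa
  namespace: database
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: test-role-2
```

A check such as k auth can-i update statefulsets -n database --as system:serviceaccount:database:test-sa should then answer yes.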

NEW QUESTION # 36

Create a RuntimeClass named gvisor-rc using the prepared runtime handler named runsc.

Create a Pod using the nginx image in the namespace server that runs on the gVisor RuntimeClass.

Answer:

Explanation:

Install the Runtime Class for gVisor

{ # Step 1: Install a RuntimeClass

cat <<EOF | kubectl apply -f -

apiVersion: node.k8s.io/v1

kind: RuntimeClass

metadata:

name: gvisor-rc

handler: runsc

EOF

}

Create a Pod with the gVisor Runtime Class

{ # Step 2: Create a pod

cat <<EOF | kubectl apply -f -

apiVersion: v1

kind: Pod

metadata:

name: nginx-gvisor

namespace: server

spec:

runtimeClassName: gvisor-rc

containers:

- name: nginx

image: nginx

EOF

}

Verify that the Pod is running

{ # Step 3: Get the pod

kubectl get pod nginx-gvisor -n server -o wide

}
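Since the manifests above lost their indentation, here is a sketch of the two objects with indentation restored, following the names given in the task (RuntimeClass gvisor-rc, handler runsc, namespace server). The Pod name nginx-gvisor is reused from the answer and is otherwise arbitrary. The node.k8s.io/v1 API version is assumed to be available on the cluster.

```yaml
# RuntimeClass is cluster-scoped, so it has no namespace
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor-rc
handler: runsc
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-gvisor
  namespace: server
spec:
  # Must match metadata.name of the RuntimeClass above
  runtimeClassName: gvisor-rc
  containers:
  - name: nginx
    image: nginx
```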

NEW QUESTION # 37

SIMULATION

Given an existing Pod named nginx-pod running in the namespace test-system, fetch the ServiceAccount name it uses and put the content in /candidate/KSC00124.txt.

Create a new Role named dev-test-role in the namespace test-system, which can perform update operations on resources of type namespaces.

Create a new RoleBinding named dev-test-role-binding, which binds the newly created Role to the Pod's ServiceAccount (found in the nginx-pod Pod running in namespace test-system).

- A. Send us your feedback on it

Answer: A
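No worked answer is given for this simulation, so the following is only a sketch of what the Role and RoleBinding might look like. The ServiceAccount name is not stated in the question, so <service-account-name> is a placeholder that has to be replaced with the value read from the Pod.

```yaml
# The ServiceAccount name can be read from the Pod, for example with:
#   kubectl get pod nginx-pod -n test-system -o jsonpath='{.spec.serviceAccountName}'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: dev-test-role
  namespace: test-system
rules:
- apiGroups: [""]          # namespaces live in the core API group
  resources: ["namespaces"]
  verbs: ["update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-test-role-binding
  namespace: test-system
subjects:
- kind: ServiceAccount
  name: <service-account-name>   # placeholder, not given in the question
  namespace: test-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: dev-test-role
```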

NEW QUESTION # 38

You must complete this task on the following cluster/nodes:

Cluster: apparmor

Master node: master

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context apparmor

Given: AppArmor is enabled on the worker1 node.

Task:

On the worker1 node,

1. Enforce the prepared AppArmor profile located at: /etc/apparmor.d/nginx

2. Edit the prepared manifest file located at /home/cert_masters/nginx.yaml to apply the AppArmor profile

3. Create the Pod using this manifest

Answer:

Explanation:

[desk@cli] $ ssh worker1

[worker1@cli] $ apparmor_parser -q /etc/apparmor.d/nginx

[worker1@cli] $ aa-status | grep nginx

nginx-profile-1

[worker1@cli] $ logout

[desk@cli] $ vim nginx-deploy.yaml

Add these lines under metadata:

annotations: # Add this line

container.apparmor.security.beta.kubernetes.io/<container-name>: localhost/nginx-profile-1

[desk@cli] $ kubectl apply -f nginx-deploy.yaml

pod/nginx-deploy created

Reference: https://kubernetes.io/docs/tutorials/clusters/apparmor/

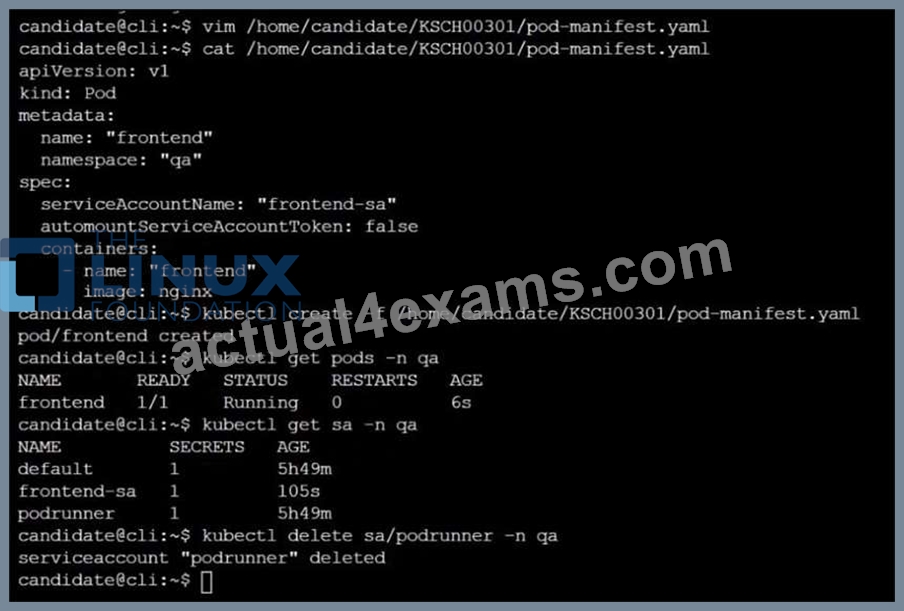
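To show where the annotation sits in the manifest, a Pod using the profile might look like the sketch below. The container name nginx is an assumption, because the prepared manifest is not reproduced here. The part of the annotation key after the slash has to match the container name exactly.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-deploy
  annotations:
    # Key suffix = container name (assumed "nginx"); value = localhost/<loaded profile name>
    container.apparmor.security.beta.kubernetes.io/nginx: localhost/nginx-profile-1
spec:
  containers:
  - name: nginx
    image: nginx
```

The profile name is the one reported by aa-status on the node (nginx-profile-1), which is not necessarily the file name under /etc/apparmor.d.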

NEW QUESTION # 39

Context

Your organization's security policy includes:

ServiceAccounts must not automount API credentials

ServiceAccount names must end in "-sa"

The Pod specified in the manifest file /home/candidate/KSCH00301/pod-manifest.yaml fails to schedule because of an incorrectly specified ServiceAccount.

Complete the following tasks:

Task

1. Create a new ServiceAccount named frontend-sa in the existing namespace qa. Ensure the ServiceAccount does not automount API credentials.

2. Using the manifest file at /home/candidate/KSCH00301/pod-manifest.yaml, create the Pod.

3. Finally, clean up any unused ServiceAccounts in namespace qa.

Answer:

Explanation:

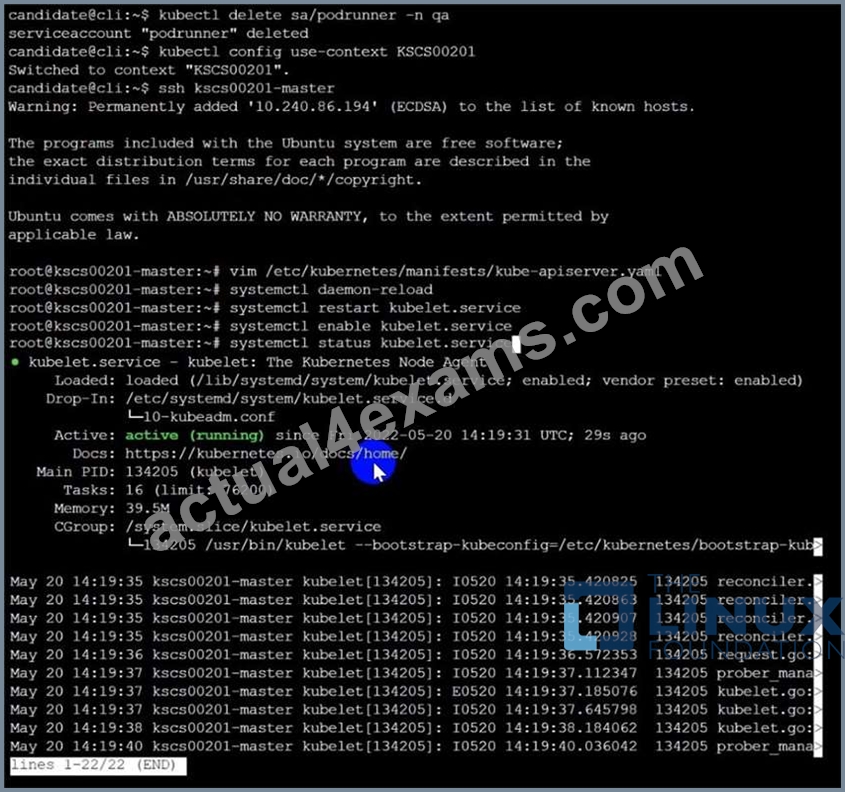
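The answer is only available as an image, so here is a hedged sketch of the two pieces the task describes. The ServiceAccount follows the names in the question. The Pod fragment only shows the field that needs correcting, since the rest of the prepared manifest is not reproduced here.

```yaml
# ServiceAccount that does not automount API credentials
apiVersion: v1
kind: ServiceAccount
metadata:
  name: frontend-sa
  namespace: qa
automountServiceAccountToken: false
---
# Fragment of the Pod manifest: point the Pod at the new ServiceAccount
spec:
  serviceAccountName: frontend-sa
```

For the cleanup step, ServiceAccounts in qa that no Pod references can be listed with kubectl get sa -n qa and removed with kubectl delete sa <name> -n qa.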

NEW QUESTION # 40

Cluster: dev

Master node: master1

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

Task:

Retrieve the content of the existing secret named adam in the safe namespace.

Store the username field in a file named /home/cert-masters/username.txt, and the password field in a file named /home/cert-masters/password.txt.

1. You must create both files; they don't exist yet.

2. Do not use/modify the created files in the following steps; create new temporary files if needed.

Create a new secret named newsecret in the safe namespace, with the following content:

Username: dbadmin

Password: moresecurepas

Finally, create a new Pod that has access to the secret newsecret via a volume:

Namespace: safe

Pod name: mysecret-pod

Container name: db-container

Image: redis

Volume name: secret-vol

Mount path: /etc/mysecret

Answer:

Explanation:
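No explanation is provided for this question, so the following is only a sketch of the Secret and Pod the task describes. The lowercase key names username and password are an assumption, since the task writes them as Username and Password. The readOnly mount is an optional extra. Retrieving the existing secret is not shown here; a command along the lines of kubectl get secret adam -n safe -o jsonpath='{.data.username}' | base64 -d would print one decoded field.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: newsecret
  namespace: safe
type: Opaque
# stringData accepts plain text; the API server stores it base64-encoded under data
stringData:
  username: dbadmin
  password: moresecurepas
---
apiVersion: v1
kind: Pod
metadata:
  name: mysecret-pod
  namespace: safe
spec:
  containers:
  - name: db-container
    image: redis
    volumeMounts:
    - name: secret-vol
      mountPath: /etc/mysecret
      readOnly: true
  volumes:
  - name: secret-vol
    secret:
      secretName: newsecret
```

Each key of the Secret then appears as a file under /etc/mysecret inside the container.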

NEW QUESTION # 41

......

The CKS certification exam is a rigorous test of an IT professional's knowledge and skills in Kubernetes security. The CKS exam consists of 17 tasks that must be completed within two hours. The tasks are designed to test the candidate's ability to identify and mitigate security risks in Kubernetes clusters and workloads. The exam is hands-on, which means that candidates must demonstrate their ability to perform tasks in a live Kubernetes environment.

Check Real Linux Foundation CKS Exam Question for Free (2024): https://www.actual4exams.com/CKS-valid-dump.html

Get all the Information About Linux Foundation CKS Exam 2024 Practice Test Questions: https://drive.google.com/open?id=1JJFtOfnahc4E50w4fjc5SiI94EP0Wmt2