Verified Professional-Machine-Learning-Engineer dumps Q&As - 2021 Latest Professional-Machine-Learning-Engineer Download

Updated 100% Cover Real Professional-Machine-Learning-Engineer Exam Questions - 100% Pass Guarantee

NEW QUESTION 35

You are an ML engineer at a global shoe store. You manage the ML models for the company's website. You are asked to build a model that will recommend new products to the user based on their purchase behavior and similarity with other users. What should you do?

- A. Build a collaborative-based filtering model

- B. Build a regression model using the features as predictors

- C. Build a knowledge-based filtering model

- D. Build a classification model

Answer: A
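Option A, user-based collaborative filtering, can be sketched like this. Each product a user has not bought gets a score: other users' purchase counts, weighted by the cosine similarity between their purchase history and this user's. The purchase data, user names, and the `recommend` helper are hypothetical. A production recommender would more likely use a matrix-factorization library.

```python
from math import sqrt

# Hypothetical purchase history: user -> {product: purchase count}
purchases = {
    "alice": {"sneaker": 2, "boot": 1},
    "bob": {"sneaker": 1, "boot": 1, "sandal": 3},
    "carol": {"loafer": 2},
}


def cosine(u, v):
    """Cosine similarity between two sparse purchase vectors."""
    dot = sum(u[p] * v[p] for p in set(u) & set(v))
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(user, data, k=3):
    """Rank products the user has not bought by similarity-weighted purchases of other users."""
    scores = {}
    for other, items in data.items():
        if other == user:
            continue
        sim = cosine(data[user], items)
        if sim <= 0:
            continue  # users with no overlap contribute nothing
        for product, count in items.items():
            if product not in data[user]:
                scores[product] = scores.get(product, 0.0) + sim * count
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [product for product, _ in ranked[:k]]
```

With this toy data, `recommend("alice", purchases)` suggests `sandal`, because Bob's history overlaps with Alice's. Carol gets no recommendations, because she shares no purchases with anyone.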

NEW QUESTION 36

A financial services company is building a robust serverless data lake on Amazon S3. The data lake should be flexible and meet the following requirements:

* Support querying old and new data on Amazon S3 through Amazon Athena and Amazon Redshift Spectrum.

* Support event-driven ETL pipelines

* Provide a quick and easy way to understand metadata

Which approach meets these requirements?

- A. Use an AWS Glue crawler to crawl S3 data, an AWS Lambda function to trigger an AWS Batch job, and an external Apache Hive metastore to search and discover metadata.

- B. Use an AWS Glue crawler to crawl S3 data, an Amazon CloudWatch alarm to trigger an AWS Batch job, and an AWS Glue Data Catalog to search and discover metadata.

- C. Use an AWS Glue crawler to crawl S3 data, an AWS Lambda function to trigger an AWS Glue ETL job, and an AWS Glue Data Catalog to search and discover metadata.

- D. Use an AWS Glue crawler to crawl S3 data, an Amazon CloudWatch alarm to trigger an AWS Glue ETL job, and an external Apache Hive metastore to search and discover metadata.

Answer: C
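The event-driven piece of option C can be sketched as a Lambda function triggered by S3. It calls the AWS Glue `start_job_run` API through boto3. The job name `example-etl-job` and the `--source_path` job argument are hypothetical. The `glue_client` parameter exists only so the handler can be exercised without AWS credentials.

```python
import urllib.parse

GLUE_JOB_NAME = "example-etl-job"  # hypothetical Glue job name


def lambda_handler(event, context, glue_client=None):
    """Start one AWS Glue ETL job run per object in an S3 event notification."""
    if glue_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime

        glue_client = boto3.client("glue")
    run_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded (spaces arrive as '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        response = glue_client.start_job_run(
            JobName=GLUE_JOB_NAME,
            Arguments={"--source_path": f"s3://{bucket}/{key}"},  # hypothetical argument
        )
        run_ids.append(response["JobRunId"])
    return {"job_run_ids": run_ids}
```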

NEW QUESTION 37

Your team is building an application for a global bank that will be used by millions of customers. You built a forecasting model that predicts customers' account balances 3 days in the future. Your team will use the results in a new feature that will notify users when their account balance is likely to drop below $25. How should you serve your predictions?

- A. (1) Build a notification system on Firebase. (2) Register each user with a user ID on the Firebase Cloud Messaging server, which sends a notification when your model predicts that a user's account balance will drop below the $25 threshold.

- B. (1) Create a Pub/Sub topic for each user. (2) Deploy an application on the App Engine standard environment that sends a notification when your model predicts that a user's account balance will drop below the $25 threshold.

- C. (1) Build a notification system on Firebase. (2) Register each user with a user ID on the Firebase Cloud Messaging server, which sends a notification when the average of all account balance predictions drops below the $25 threshold.

- D. (1) Create a Pub/Sub topic for each user. (2) Deploy a Cloud Function that sends a notification when your model predicts that a user's account balance will drop below the $25 threshold.

Answer: A

NEW QUESTION 38

A data scientist has developed a machine learning translation model for English to Japanese by using Amazon SageMaker's built-in seq2seq algorithm with 500,000 aligned sentence pairs. While testing with sample sentences, the data scientist finds that the translation quality is reasonable for an example as short as five words. However, the quality becomes unacceptable if the sentence is 100 words long.

Which action will resolve the problem?

- A. Change preprocessing to use n-grams.

- B. Adjust hyperparameters related to the attention mechanism.

- C. Choose a different weight initialization type.

- D. Add more nodes to the recurrent neural network (RNN) than the largest sentence's word count.

Answer: B

NEW QUESTION 39

You work for an online retail company that is creating a visual search engine. You have set up an end-to-end ML pipeline on Google Cloud to classify whether an image contains your company's product. Because you expect to release new products in the near future, you configured a retraining functionality in the pipeline so that new data can be fed into your ML models. You also want to use AI Platform's continuous evaluation service to ensure that the models have high accuracy on your test dataset. What should you do?

- A. Update your test dataset with images of the newer products when your evaluation metrics drop below a pre-decided threshold.

- B. Extend your test dataset with images of the newer products when they are introduced to retraining

- C. Keep the original test dataset unchanged even if newer products are incorporated into retraining

- D. Replace your test dataset with images of the newer products when they are introduced to retraining.

Answer: B

NEW QUESTION 40

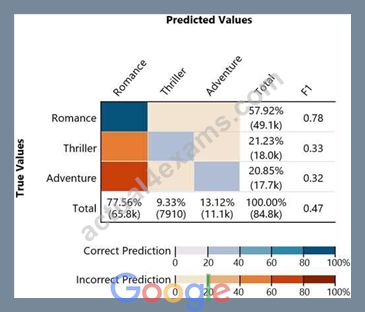

Given the following confusion matrix for a movie classification model, what is the true class frequency for Romance and the predicted class frequency for Adventure?

- A. The true class frequency for Romance is 77.56% and the predicted class frequency for Adventure is 20.85%.

- B. The true class frequency for Romance is 57.92% and the predicted class frequency for Adventure is 13.12%.

- C. The true class frequency for Romance is 0.78 and the predicted class frequency for Adventure is (0.47 - 0.32).

- D. The true class frequency for Romance is 77.56% * 0.78 and the predicted class frequency for Adventure is 20.85% * 0.32.

Answer: B
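This sketch assumes the usual layout, where rows are true classes and columns are predicted classes. Under that layout, the true class frequency is a row total divided by the grand total. The predicted class frequency is a column total divided by the grand total. The counts below are invented for illustration and are not the values in the missing figure.

```python
# Hypothetical counts: rows = true class, columns = predicted class.
labels = ["Romance", "Adventure", "Comedy"]
matrix = [
    [40, 5, 5],   # true Romance
    [4, 20, 6],   # true Adventure
    [6, 5, 9],    # true Comedy
]


def true_class_frequency(matrix, i):
    """Share of all examples whose true class is i (row total / grand total)."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i]) / total


def predicted_class_frequency(matrix, j):
    """Share of all examples predicted as class j (column total / grand total)."""
    total = sum(sum(row) for row in matrix)
    return sum(row[j] for row in matrix) / total
```

With these made-up counts, 50 of 100 examples are truly Romance, and 30 of 100 are predicted as Adventure.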

NEW QUESTION 41

A Machine Learning Specialist is packaging a custom ResNet model into a Docker container so the company can leverage Amazon SageMaker for training. The Specialist is using Amazon EC2 P3 instances to train the model and needs to properly configure the Docker container to leverage the NVIDIA GPUs.

What does the Specialist need to do?

- A. Build the Docker container to be NVIDIA-Docker compatible.

- B. Set the GPU flag in the Amazon SageMaker CreateTrainingJob request body.

- C. Organize the Docker container's file structure to execute on GPU instances.

- D. Bundle the NVIDIA drivers with the Docker image.

Answer: A

NEW QUESTION 42

You work for an online travel agency that also sells advertising placements on its website to other companies.

You have been asked to predict the most relevant web banner that a user should see next. Security is important to your company. The model latency requirement is 300 ms at the 99th percentile (p99), the inventory consists of thousands of web banners, and your exploratory analysis has shown that navigation context is a good predictor.

You want to implement the simplest solution. How should you configure the prediction pipeline?

- A. Embed the client on the website, deploy the gateway on App Engine, and then deploy the model on AI Platform Prediction.

- B. Embed the client on the website, deploy the gateway on App Engine, deploy the database on Cloud Bigtable for writing and for reading the user's navigation context, and then deploy the model on AI Platform Prediction.

- C. Embed the client on the website, and then deploy the model on AI Platform Prediction.

- D. Embed the client on the website, deploy the gateway on App Engine, deploy the database on Memorystore for writing and for reading the user's navigation context, and then deploy the model on Google Kubernetes Engine.

Answer: A

NEW QUESTION 43

You need to train a computer vision model that predicts the type of government ID present in a given image using a GPU-powered virtual machine on Compute Engine. You use the following parameters:

* Optimizer: SGD

* Image shape = 224x224

* Batch size = 64

* Epochs = 10

* Verbose = 2

During training, you encounter the following error: ResourceExhaustedError: out of memory (OOM) when allocating tensor. What should you do?

- A. Reduce the image shape

- B. Reduce the batch size

- C. Change the learning rate

- D. Change the optimizer

Answer: B
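Reducing the batch size helps because activation memory grows roughly linearly with the number of examples per step. The helper below counts only the input tensor. It assumes RGB images (3 channels) stored as float32, so it understates the real footprint. It still shows the linear scaling.

```python
def input_batch_bytes(batch_size, height, width, channels=3, bytes_per_value=4):
    """Bytes for one input batch of images (float32 by default).

    Activations and their gradients add much more on top of this and also grow
    linearly with batch_size; weights and optimizer state do not.
    """
    return batch_size * height * width * channels * bytes_per_value


full = input_batch_bytes(64, 224, 224)  # batch size from the question
half = input_batch_bytes(32, 224, 224)
```

Halving the batch from 64 to 32 halves this figure, from 38,535,168 bytes to 19,267,584 bytes.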

NEW QUESTION 44

A company is using Amazon Textract to extract textual data from thousands of scanned text-heavy legal documents daily. The company uses this information to process loan applications automatically. Some of the documents fail business validation and are returned to human reviewers, who investigate the errors. This activity increases the time to process the loan applications.

What should the company do to reduce the processing time of loan applications?

- A. Configure Amazon Textract to route low-confidence predictions to Amazon Augmented AI (Amazon A2I). Perform a manual review on those words before performing a business validation.

- B. Use Amazon Rekognition's feature to detect text in an image to extract the data from scanned images. Use this information to process the loan applications.

- C. Configure Amazon Textract to route low-confidence predictions to Amazon SageMaker Ground Truth. Perform a manual review on those words before performing a business validation.

- D. Use an Amazon Textract synchronous operation instead of an asynchronous operation.

Answer: A
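Option A relies on confidence-based routing. Textract returns a `Confidence` score (0 to 100) for each `WORD` block, and only low-scoring words need a human look. The sketch below filters a Textract-style block list in plain Python. The 90.0 threshold and the sample blocks are hypothetical. In practice, the managed Textract and Amazon A2I integration is configured through a human review workflow (flow definition) with activation conditions, not hand-written filtering.

```python
# Hypothetical cutoff on Textract's 0-100 confidence scale.
CONFIDENCE_THRESHOLD = 90.0


def low_confidence_words(blocks, threshold=CONFIDENCE_THRESHOLD):
    """Return (text, confidence) for WORD blocks scored below `threshold`."""
    return [
        (block["Text"], block["Confidence"])
        for block in blocks
        if block.get("BlockType") == "WORD" and block.get("Confidence", 100.0) < threshold
    ]


# Heavily trimmed Textract-style response; the values are invented.
sample_blocks = [
    {"BlockType": "LINE", "Text": "Loan amount $12,500", "Confidence": 97.0},
    {"BlockType": "WORD", "Text": "Loan", "Confidence": 99.1},
    {"BlockType": "WORD", "Text": "$12,5OO", "Confidence": 61.4},
]
```

Only the misread amount would be sent for review before business validation. High-confidence words pass straight through.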

NEW QUESTION 45

This graph shows the training and validation loss against the epochs for a neural network.

The network being trained is as follows:

* Two dense layers, one output neuron

* 100 neurons in each layer

* 100 epochs

* Random initialization of weights

Which technique can be used to improve model performance in terms of accuracy in the validation set?

- A. Early stopping

- B. Adding another layer with the 100 neurons

- C. Increasing the number of epochs

- D. Random initialization of weights with appropriate seed

Answer: A
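Early stopping (option A) halts training once validation loss stops improving and keeps the best epoch. This plain-Python sketch shows the patience logic. In Keras, the equivalent is the `tf.keras.callbacks.EarlyStopping` callback with `monitor="val_loss"`, `patience`, and `restore_best_weights=True`. The loss values in the usage note are invented.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the index of the best epoch.

    Training stops once validation loss fails to improve by more than
    `min_delta` for `patience` consecutive epochs. Returns -1 for an empty list.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # stop: no improvement for `patience` epochs
    return best_epoch
```

For example, `early_stopping_epoch([1.0, 0.8, 0.6, 0.55, 0.57, 0.6, 0.65, 0.7, 0.4], patience=3)` returns 3. The loss fails to improve for three epochs after epoch 3, so training stops before the later 0.4 is ever reached.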

NEW QUESTION 46

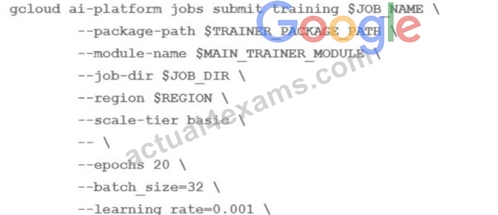

You are training an LSTM-based model on AI Platform to summarize text using the following job submission script:

You want to ensure that training time is minimized without significantly compromising the accuracy of your model. What should you do?

- A. Modify the 'batch size' parameter

- B. Modify the 'learning rate' parameter

- C. Modify the 'epochs' parameter

- D. Modify the 'scale-tier' parameter

Answer: D

NEW QUESTION 47

Your team is building a convolutional neural network (CNN)-based architecture from scratch. The preliminary experiments running on your on-premises CPU-only infrastructure were encouraging, but have slow convergence. You have been asked to speed up model training to reduce time-to-market. You want to experiment with virtual machines (VMs) on Google Cloud to leverage more powerful hardware. Your code does not include any manual device placement and has not been wrapped in Estimator model-level abstraction. Which environment should you train your model on?

- A. A VM on Compute Engine and 1 TPU with all dependencies installed manually.

- B. A VM on Compute Engine and 8 GPUs with all dependencies installed manually.

- C. A Deep Learning VM with an n1-standard-2 machine and 1 GPU with all libraries pre-installed.

- D. A Deep Learning VM with more powerful CPU e2-highcpu-16 machines with all libraries pre-installed.

Answer: C

NEW QUESTION 48

You have trained a deep neural network model on Google Cloud. The model has low loss on the training data but performs worse on the validation data. You want the model to be resilient to overfitting. Which strategy should you use when retraining the model?

- A. Run a hyperparameter tuning job on AI Platform to optimize for the L2 regularization and dropout parameters.

- B. Run a hyperparameter tuning job on AI Platform to optimize for the learning rate, and increase the number of neurons by a factor of 2.

- C. Apply a dropout parameter of 0.2, and decrease the learning rate by a factor of 10.

- D. Apply an L2 regularization parameter of 0.4, and decrease the learning rate by a factor of 10.

Answer: A
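The two regularizers named in option A can each be written in a few lines. The first is an L2 penalty added to the loss. The second is inverted dropout, applied to activations during training. This is a plain-Python illustration with hypothetical inputs. In Keras, the same ideas are exposed as `tf.keras.regularizers.l2` and `tf.keras.layers.Dropout`. A hyperparameter tuning job would search over the penalty strength and the dropout rate.

```python
import random


def l2_penalty(weights, lam):
    """L2 regularization term added to the training loss: lam * sum(w^2)."""
    return lam * sum(w * w for w in weights)


def inverted_dropout(activations, rate, rng):
    """Zero each activation with probability `rate` and scale survivors by
    1 / (1 - rate) so the expected activation is unchanged (training only)."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

At inference time, dropout is switched off and the activations pass through unchanged. The rescaling during training is what makes that possible without adjusting the weights.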

NEW QUESTION 49

You have been asked to develop an input pipeline for an ML training model that processes images from disparate sources at a low latency. You discover that your input data does not fit in memory. How should you create a dataset following Google-recommended best practices?

- A. Convert the images into TFRecords, store the images in Cloud Storage, and then use the tf.data API to read the images for training.

- B. Convert the images to tf.Tensor objects, and then run Dataset.from_tensor_slices().

- C. Convert the images to tf.Tensor objects, and then run tf.data.Dataset.from_tensors().

- D. Create a tf.data.Dataset.prefetch transformation

Answer: A

NEW QUESTION 50

A Machine Learning Specialist is training a model to identify the make and model of vehicles in images. The Specialist wants to use transfer learning and an existing model trained on images of general objects. The Specialist collated a large custom dataset of pictures containing different vehicle makes and models.

What should the Specialist do to initialize the model to re-train it with the custom data?

- A. Initialize the model with pre-trained weights in all layers including the last fully connected layer.

- B. Initialize the model with random weights in all layers including the last fully connected layer.

- C. Initialize the model with random weights in all layers and replace the last fully connected layer.

- D. Initialize the model with pre-trained weights in all layers and replace the last fully connected layer.

Answer: D

NEW QUESTION 51

A Data Scientist needs to migrate an existing on-premises ETL process to the cloud. The current process runs at regular time intervals and uses PySpark to combine and format multiple large data sources into a single consolidated output for downstream processing.

The Data Scientist has been given the following requirements for the cloud solution:

* Combine multiple data sources.

* Reuse existing PySpark logic.

* Run the solution on the existing schedule.

* Minimize the number of servers that will need to be managed.

Which architecture should the Data Scientist use to build this solution?

- A. Write the raw data to Amazon S3. Create an AWS Glue ETL job to perform the ETL processing against the input data. Write the ETL job in PySpark to leverage the existing logic. Create a new AWS Glue trigger to trigger the ETL job based on the existing schedule. Configure the output target of the ETL job to write to a "processed" location in Amazon S3 that is accessible for downstream use.

- B. Write the raw data to Amazon S3. Schedule an AWS Lambda function to submit a Spark step to a persistent Amazon EMR cluster based on the existing schedule. Use the existing PySpark logic to run the ETL job on the EMR cluster. Output the results to a "processed" location in Amazon S3 that is accessible for downstream use.

- C. Use Amazon Kinesis Data Analytics to stream the input data and perform real-time SQL queries against the stream to carry out the required transformations within the stream. Deliver the output results to a "processed" location in Amazon S3 that is accessible for downstream use.

- D. Write the raw data to Amazon S3. Schedule an AWS Lambda function to run on the existing schedule and process the input data from Amazon S3. Write the Lambda logic in Python and implement the existing PySpark logic to perform the ETL process. Have the Lambda function output the results to a "processed" location in Amazon S3 that is accessible for downstream use.

Answer: A

NEW QUESTION 52

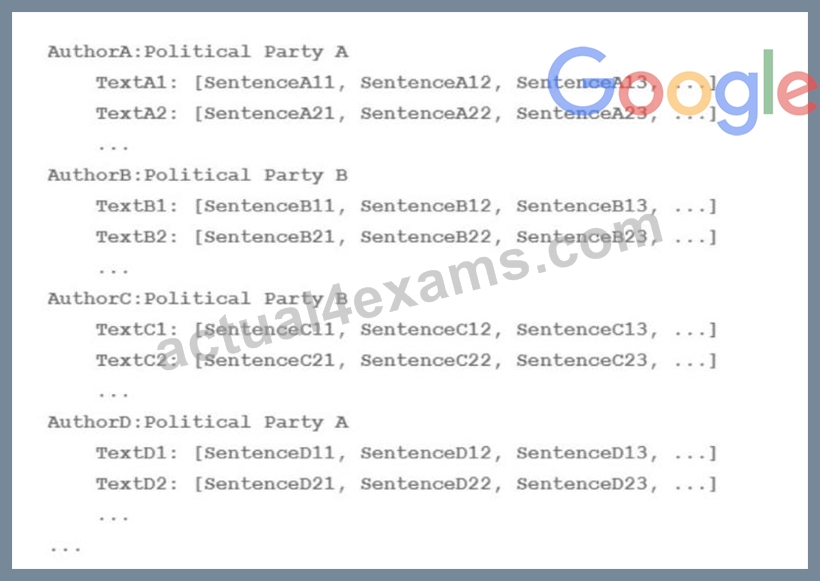

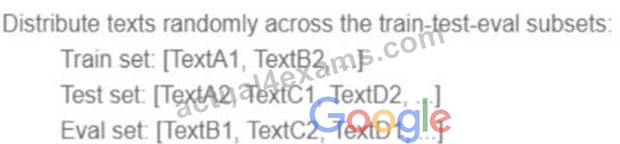

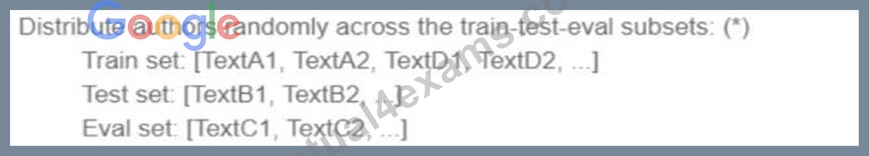

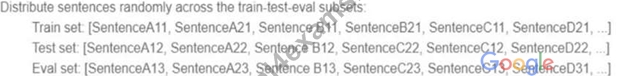

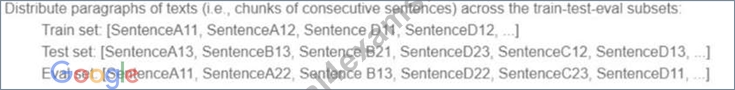

Your team is working on an NLP research project to predict political affiliation of authors based on articles they have written. You have a large training dataset that is structured like this:

A)

B)

C)

D)

- A. Option B

- B. Option C

- C. Option A

- D. Option D

Answer: D

NEW QUESTION 53

A Machine Learning Specialist is building a model that will perform time series forecasting using Amazon SageMaker. The Specialist has finished training the model and is now planning to perform load testing on the endpoint so they can configure Auto Scaling for the model variant.

Which approach will allow the Specialist to review the latency, memory utilization, and CPU utilization during the load test?

- A. Generate an Amazon CloudWatch dashboard to create a single view for the latency, memory utilization, and CPU utilization metrics that are outputted by Amazon SageMaker.

- B. Send Amazon CloudWatch Logs that were generated by Amazon SageMaker to Amazon ES and use Kibana to query and visualize the log data.

- C. Build custom Amazon CloudWatch Logs and then leverage Amazon ES and Kibana to query and visualize the log data as it is generated by Amazon SageMaker.

- D. Review SageMaker logs that have been written to Amazon S3 by leveraging Amazon Athena and Amazon QuickSight to visualize logs as they are being produced.

Answer: A
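A CloudWatch dashboard (option A) is defined by a JSON body. The sketch below builds one with three widgets. `ModelLatency` comes from the `AWS/SageMaker` namespace. `CPUUtilization` and `MemoryUtilization` come from the `/aws/sagemaker/Endpoints` namespace. The endpoint name, variant name, and region are assumptions. Check the metric and dimension names against the SageMaker CloudWatch documentation linked below for your setup.

```python
import json

ENDPOINT = "forecast-endpoint"  # hypothetical endpoint name
VARIANT = "AllTraffic"          # assumed variant name (the SageMaker Python SDK default)
REGION = "us-east-1"            # assumed region


def metric_widget(title, namespace, metric, x, y):
    """One line-graph widget for a single endpoint metric."""
    return {
        "type": "metric",
        "x": x, "y": y, "width": 12, "height": 6,
        "properties": {
            "title": title,
            "region": REGION,
            "stat": "Average",
            "period": 60,
            "metrics": [[namespace, metric, "EndpointName", ENDPOINT, "VariantName", VARIANT]],
        },
    }


dashboard_body = json.dumps({
    "widgets": [
        metric_widget("Model latency (microseconds)", "AWS/SageMaker", "ModelLatency", 0, 0),
        metric_widget("CPU utilization", "/aws/sagemaker/Endpoints", "CPUUtilization", 12, 0),
        metric_widget("Memory utilization", "/aws/sagemaker/Endpoints", "MemoryUtilization", 0, 6),
    ]
})
# The body could then be published with boto3, for example:
# boto3.client("cloudwatch").put_dashboard(DashboardName="load-test", DashboardBody=dashboard_body)
```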

Explanation:

Explanation/Reference: https://docs.aws.amazon.com/sagemaker/latest/dg/monitoring-cloudwatch.html

NEW QUESTION 54

An online reseller has a large, multi-column dataset with one column missing 30% of its data. A Machine Learning Specialist believes that certain columns in the dataset could be used to reconstruct the missing data.

Which reconstruction approach should the Specialist use to preserve the integrity of the dataset?

- A. Listwise deletion

- B. Last observation carried forward

- C. Multiple imputation

- D. Mean substitution

Answer: C
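Multiple imputation works in three steps:

1. Fill each missing value several times with plausible draws from a model of the other columns.
2. Analyze each completed dataset.
3. Pool the results using Rubin's rules.

This keeps the uncertainty introduced by imputation visible instead of hiding it. The sketch below is a simplified version with one hypothetical predictor column and a least-squares model plus Gaussian residual noise. A full implementation would also draw the regression parameters for each imputation. Alternatively, scikit-learn's `IterativeImputer` with `sample_posterior=True` can be run with different random seeds to produce multiple imputations.

```python
import random
from statistics import mean, variance


def fit_line(xs, ys):
    """Ordinary least squares y = a + b*x; returns (a, b, residual std dev)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    a = my - b * mx
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
    sd = (sum(r * r for r in residuals) / (len(xs) - 2)) ** 0.5
    return a, b, sd


def multiple_imputation(x, y, m=5, seed=0):
    """Impute missing y values (None) from predictor x, m times, with random
    residual noise each time; pool the mean of y with Rubin's rules."""
    rng = random.Random(seed)
    observed = [(xi, yi) for xi, yi in zip(x, y) if yi is not None]
    a, b, sd = fit_line([p[0] for p in observed], [p[1] for p in observed])
    estimates, within = [], []
    for _ in range(m):
        completed = [yi if yi is not None else a + b * xi + rng.gauss(0.0, sd)
                     for xi, yi in zip(x, y)]
        estimates.append(mean(completed))
        within.append(variance(completed) / len(completed))  # variance of the mean
    pooled = mean(estimates)
    total_var = mean(within) + (1 + 1 / m) * variance(estimates)
    return pooled, total_var
```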

Explanation:

Explanation/Reference: https://worldwidescience.org/topicpages/i/imputing+missing+values.html

NEW QUESTION 55

You need to build classification workflows over several structured datasets currently stored in BigQuery. Because you will be performing the classification several times, you want to complete the following steps without writing code: exploratory data analysis, feature selection, model building, training, and hyperparameter tuning and serving. What should you do?

- A. Configure AutoML Tables to perform the classification task

- B. Run a BigQuery ML task to perform logistic regression for the classification

- C. Use AI Platform Notebooks to run the classification model with pandas library

- D. Use AI Platform to run the classification model job configured for hyperparameter tuning

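Answer: A

Explanation:

AutoML Tables can import datasets directly from BigQuery and covers exploratory data analysis, feature selection, model building, training, hyperparameter tuning, and serving without writing code. BigQuery ML also supports logistic regression, but it requires writing SQL.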

NEW QUESTION 56

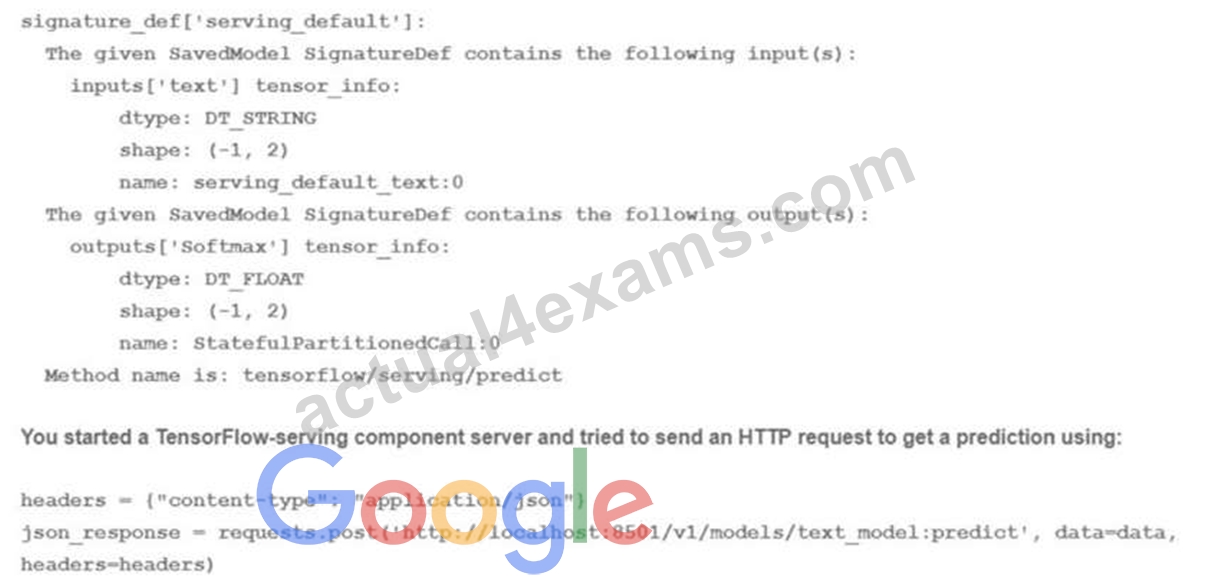

You trained a text classification model. You have the following SignatureDefs:

What is the correct way to write the predict request?

- A. data = json.dumps({"signature_name": "serving_default", "instances": [['a', 'b', 'c', 'd', 'e', 'f']]})

- B. data = json.dumps({"signature_name": "serving_default", "instances": [['ab', 'bc', 'cd']]})

- C. data = json.dumps({"signature_name": "serving_default", "instances": [['a', 'b'], ['c', 'd'], ['e', 'f']]})

- D. data = json.dumps({"signature_name": "serving_default", "instances": [['a', 'b', 'c'], ['d', 'e', 'f']]})

Answer: A
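TensorFlow Serving's REST predict API accepts a JSON body with `signature_name` and an `instances` list (the "row" format). Each instance must match the shape of the SignatureDef input. The SignatureDef figure is missing here, so the sketch takes the expected inner dimension as a parameter instead of assuming a value. `build_predict_request` is a hypothetical helper.

```python
import json


def build_predict_request(instances, inner_dim, signature_name="serving_default"):
    """Return a JSON predict body in TensorFlow Serving's row ("instances") format.

    `inner_dim` is the expected length of each instance, read from the
    SignatureDef input shape (for example 2 for a shape of (-1, 2)).
    """
    for row in instances:
        if len(row) != inner_dim:
            raise ValueError(f"instance {row!r} has {len(row)} values, expected {inner_dim}")
    return json.dumps({"signature_name": signature_name, "instances": instances})


# The body would typically be POSTed to http://<host>:8501/v1/models/<model_name>:predict
```

Validating the instance width on the client side surfaces a shape mismatch before the request is sent. Otherwise it would only show up as a server-side error.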

NEW QUESTION 57

A large company has developed a BI application that generates reports and dashboards using data collected from various operational metrics. The company wants to provide executives with an enhanced experience so they can use natural language to get data from the reports. The company wants the executives to be able to ask questions using written and spoken interfaces.

Which combination of services can be used to build this conversational interface? (Choose three.)

- A. Amazon Comprehend

- B. Alexa for Business

- C. Amazon Transcribe

- D. Amazon Lex

- E. Amazon Polly

- F. Amazon Connect

Answer: A,C,F

NEW QUESTION 58

A Data Engineer needs to build a model using a dataset containing customer credit card information. How can the Data Engineer ensure the data remains encrypted and the credit card information is secure?

- A. Use an IAM policy to encrypt the data on the Amazon S3 bucket and Amazon Kinesis to automatically discard credit card numbers and insert fake credit card numbers.

- B. Use an Amazon SageMaker launch configuration to encrypt the data once it is copied to the SageMaker instance in a VPC. Use the SageMaker principal component analysis (PCA) algorithm to reduce the length of the credit card numbers.

- C. Use AWS KMS to encrypt the data on Amazon S3 and Amazon SageMaker, and redact the credit card numbers from the customer data with AWS Glue.

- D. Use a custom encryption algorithm to encrypt the data and store the data on an Amazon SageMaker instance in a VPC. Use the SageMaker DeepAR algorithm to randomize the credit card numbers.

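Answer: C

Explanation:

AWS KMS can encrypt the data at rest in Amazon S3 and on the storage volumes used by Amazon SageMaker, and an AWS Glue job can redact the credit card numbers before the data is used for training. PCA and DeepAR are modeling algorithms and do not secure or anonymize card numbers.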
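The redaction step in option C could be a function that an AWS Glue PySpark job applies to each text field, for example through a UDF. The plain-Python sketch below masks sequences of 13 to 19 digits that pass the Luhn checksum used by payment card numbers. The pattern, the mask text, and the function names are assumptions. KMS encryption is configured on the S3 bucket and SageMaker resources, not in code like this.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits):
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_card_numbers(text, mask="[REDACTED]"):
    """Replace Luhn-valid card-like digit runs with `mask`; leave others intact."""
    def replace(match):
        digits = re.sub(r"\D", "", match.group())
        return mask if luhn_valid(digits) else match.group()

    return CARD_PATTERN.sub(replace, text)
```

The Luhn check keeps ordinary long numbers, such as order IDs, from being masked unless they happen to pass the checksum.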

NEW QUESTION 59

......
